This lecture introduces problems and solutions for monitoring a computer system and the applications running on top of it. We focus on monitoring LXC containers, and more generally a Linux system, with Collectd. In a broad sense, monitoring systems are responsible for supervising the technology used by a company (hardware, networks and communications, operating systems or applications, among others) in order to analyse its operation and performance, and to detect and raise alerts about possible errors. A monitoring system can monitor devices, infrastructures, applications, services, and even business processes.

Monitoring systems often have a number of common features, including the following:

There exist many high-level monitoring systems, among them:

At the Linux system level, we recommend reading "30 Linux System Monitoring Tools every Sysadmin should know".

Although this is not the subject of this lecture, we would like to cite the "Workload Characterization and Modeling Book", available online, which provides the background needed to model and analyze the collected data. Indeed, it is not just a matter of displaying raw data, but of inferring information from observations in order to make decisions. Workload modelling is the analytical technique used to measure and predict workload; its main objective is to achieve an evenly distributed, manageable workload and to avoid overload or underload. A complementary reading is R. Jain, "The Art of Computer Systems Performance Analysis: Techniques for Experimental Design, Measurement, Simulation, and Modeling", Wiley-Interscience, New York, NY, April 1991, ISBN 0471503361.

As stated in the collectd documentation pages:

The daemon comes with over 100 plugins which range from standard cases to very specialized and advanced topics. It provides powerful networking features and is extensible in numerous ways. Last but not least, collectd is actively developed and supported and well documented. A more complete list of features is available.

All manipulations are done on an Ubuntu system:

Linux 5.8.0-33-generic (ordinateur-cerin) 17/01/2021 _x86_64_ (4 CPU)

$ lsb_release -a

No LSB modules are available.

Distributor ID: Ubuntu

Description: Ubuntu 20.10

Release: 20.10

Codename: groovy

The simplest way to install, configure and run the daemon is through apt. First install Apache: sudo apt-get install apache2, then install collectd: sudo apt-get install collectd. You also need some additional packages: sudo apt-get install librrds-perl libconfig-general-perl libhtml-parser-perl libregexp-common-perl. We also recommend installing some tools for the SysAdmin: sudo apt install sysstat.

You can also follow the Getting started page to compile the source files and install collectd.

That being said, here is how the collection3 web front end is installed. In the contrib/ directory of the sources (or the directory /usr/share/doc/collectd/examples/ if you installed the Debian package) you will find a directory named collection3. This directory holds all the files needed for graph generation, grouped into subdirectories. Copy the entire directory to a place where your web server expects to find something.

$ cp -r contrib/collection3 /usr/lib/cgi-bin

$ cd /usr/lib/cgi-bin/collection3

In the subdirectory bin/ you will find the CGI scripts that can be executed by the web server. In the share/ subdirectory there are supplementary files, such as style sheets, which must not be executed. Since execution of files can't be turned off in directories referenced via ScriptAlias, using the standard cgi-bin directory provided by most distributions is probably problematic.

Before the copy, our installation is as follows:

$ ll /usr/share/doc/collectd/examples/

total 296

drwxr-xr-x 7 root root 4096 janv. 15 10:03 ./

drwxr-xr-x 3 root root 4096 janv. 15 10:03 ../

-rwxr-xr-x 1 root root 1106 mars 8 2020 add_rra.sh*

-rw-r--r-- 1 root root 6063 mars 8 2020 collectd2html.pl

-rw-r--r-- 1 root root 36107 juil. 28 11:21 collectd.conf

-rw-r--r-- 1 root root 9638 mars 8 2020 collectd_network.py

-rw-r--r-- 1 root root 8067 mars 8 2020 collectd_unixsock.py

drwxr-xr-x 6 root root 4096 janv. 15 10:03 collection3/

-rwxr-xr-x 1 root root 106103 juil. 28 11:21 collection.cgi*

-rwxr-xr-x 1 root root 9196 mars 8 2020 cussh.pl*

-rwxr-xr-x 1 root root 1920 mars 8 2020 exec-ksm.sh*

-rw-r--r-- 1 root root 98 mars 8 2020 exec-munin.conf

-rwxr-xr-x 1 root root 6386 mars 8 2020 exec-munin.px*

-rw-r--r-- 1 root root 289 juil. 28 11:21 exec-nagios.conf

-rwxr-xr-x 1 root root 11325 mars 8 2020 exec-nagios.px*

-rwxr-xr-x 1 root root 1784 mars 8 2020 exec-smartctl*

-rw-r--r-- 1 root root 656 juil. 28 11:21 filters.conf

-rw-r--r-- 1 root root 6684 mars 8 2020 GenericJMX.conf

drwxr-xr-x 2 root root 4096 janv. 15 10:03 iptables/

-rw-r--r-- 1 root root 1677 mars 8 2020 network-proxy.py

drwxr-xr-x 2 root root 4096 janv. 15 10:03 php-collection/

drwxr-xr-x 2 root root 4096 janv. 15 10:03 postgresql/

-rw-r--r-- 1 root root 12767 mars 8 2020 snmp-data.conf

-rwxr-xr-x 1 root root 9348 mars 8 2020 snmp-probe-host.px*

drwxr-xr-x 2 root root 4096 janv. 15 10:03 SpamAssassin/

-rw-r--r-- 1 root root 836 juil. 28 11:21 thresholds.conf

After the copy, our configuration is as follows:

$ ll /usr/lib/cgi-bin/collection3/

total 28

drwxr-xr-x 6 root root 4096 janv. 15 11:20 ./

drwxr-xr-x 3 root root 4096 janv. 15 11:20 ../

drwxr-xr-x 2 root root 4096 janv. 15 11:20 bin/

drwxr-xr-x 2 root root 4096 janv. 15 11:20 etc/

drwxr-xr-x 3 root root 4096 janv. 15 11:20 lib/

-rw-r--r-- 1 root root 1259 janv. 15 11:20 README

drwxr-xr-x 2 root root 4096 janv. 15 11:20 share/

Great, you can now access the CGI scripts at this URL: http://localhost/cgi-bin/collection3/bin/index.cgi. However, you will be served a text file, since Apache does not yet know that it should execute these CGI scripts. A simple manual explains how CGI scripts work in Apache.

You have to do two things. First, you need to enable the cgi module: go to /etc/apache2/mods-enabled and run sudo ln -s ../mods-available/cgi.load. The cgi module is now enabled.

Next, you have to change apache2.conf, located in /etc/apache2 (Ubuntu does not use httpd.conf). Add these lines at the bottom of the file:

<Directory "/usr/lib/cgi-bin">

Options +ExecCGI

AddHandler cgi-script .cgi

</Directory>

Restart the Apache daemon: sudo service apache2 restart.

You can also restart the collectd daemon, and enable it at boot time, as follows:

sudo /etc/init.d/collectd restart

sudo systemctl enable collectd.service

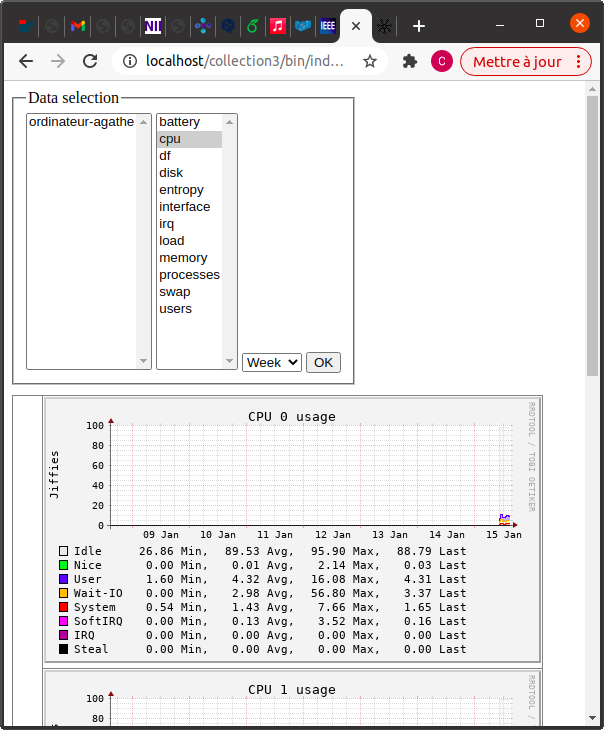

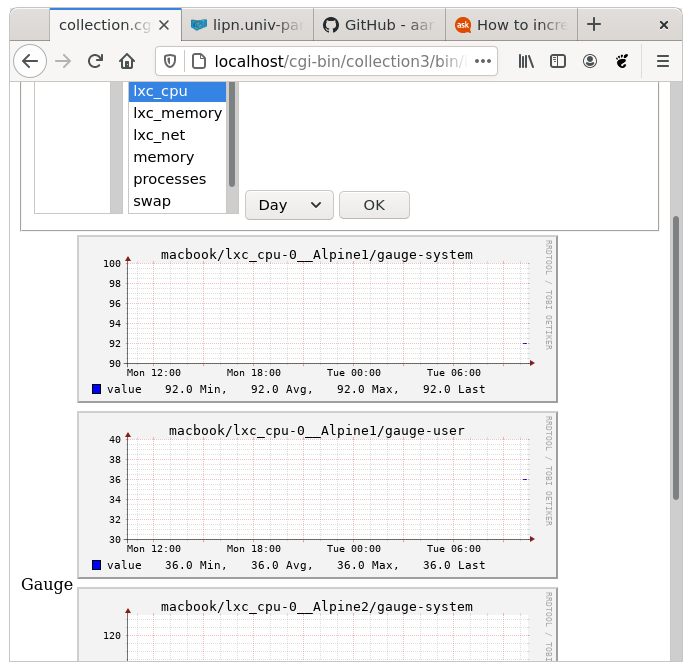

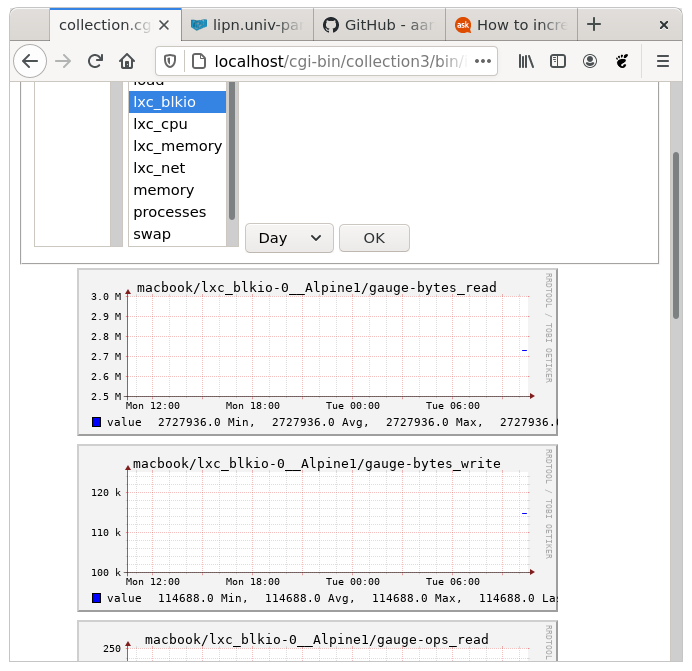

And you're done! If you go to http://localhost/cgi-bin/collection3/bin/index.cgi, you should see graphs like those in Figure 1. Note that we monitor a single node here: Apache, collectd and the applications all run on the same node.
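To check from a script that Apache now executes the collection3 CGI instead of serving its source, a small Python sketch can fetch the page. It relies on a heuristic assumption of ours: a Perl CGI script served as plain text starts with its "#!" interpreter line, whereas an executed one returns HTML. The URL is the one used above; cgi_was_executed and check_collection3 are hypothetical helper names.

```python
import urllib.request

# URL used in this lecture; adapt the host and path to your installation.
INDEX_URL = "http://localhost/cgi-bin/collection3/bin/index.cgi"


def cgi_was_executed(body):
    """Heuristic: a CGI script served as plain text starts with its '#!' line."""
    return not body.lstrip().startswith(b"#!")


def check_collection3(url=INDEX_URL, timeout=5):
    """Fetch the page and return (content type, whether the CGI seems executed)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        body = resp.read()
        return resp.headers.get_content_type(), cgi_was_executed(body)
```

Once the cgi module and the Directory block are in place, check_collection3() should report True as its second element; before that, it should report False.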

Collectd debug mode: to activate the debug mode, add (or uncomment) the following lines in the /etc/collectd/collectd.conf file:

LoadPlugin logfile

LoadPlugin syslog

LoadPlugin log_logstash

<Plugin logfile>

LogLevel "info"

File STDOUT

Timestamp true

PrintSeverity false

</Plugin>

<Plugin syslog>

LogLevel info

</Plugin>

<Plugin log_logstash>

LogLevel info

File "/var/log/collectd.json.log"

</Plugin>

Debug: if you encounter an error message like this one in the Apache logs:

$ tail -n 50 /var/log/apache2/error.log

.....

[Thu Jan 21 11:14:55.186079 2021] [cgi:error] [pid 33504:tid 140113812108864] [client ::1:48890] AH01215: Can't locate CGI.pm in @INC (you may need to install the CGI module) (@INC contains: /usr/lib/cgi-bin/lib /etc/perl /usr/local/lib/x86_64-linux-gnu/perl/5.30.3 /usr/local/share/perl/5.30.3 /usr/lib/x86_64-linux-gnu/perl5/5.30 /usr/share/perl5 /usr/lib/x86_64-linux-gnu/perl-base /usr/lib/x86_64-linux-gnu/perl/5.30 /usr/share/perl/5.30 /usr/local/lib/site_perl) at /usr/lib/cgi-bin/bin/index.cgi line 40.: /usr/lib/cgi-bin/bin/index.cgi

[Thu Jan 21 11:14:55.186255 2021] [cgi:error] [pid 33504:tid 140113812108864] [client ::1:48890] AH01215: BEGIN failed--compilation aborted at /usr/lib/cgi-bin/bin/index.cgi line 40.: /usr/lib/cgi-bin/bin/index.cgi

[Thu Jan 21 11:14:55.186291 2021] [cgi:error] [pid 33504:tid 140113812108864] [client ::1:48890] End of script output before headers: index.cgi

you should install the Perl CGI module as follows: sudo apt-get install libcgi-session-perl.
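When several Perl modules are missing, the Apache error log repeats the same "Can't locate ... in @INC" pattern. The following Python sketch (function and variable names are ours) extracts the missing module names from the log so that the corresponding packages can be looked up; it only recognizes the message format shown above.

```python
import re

# Matches the Perl message shown above, e.g. "Can't locate CGI.pm in @INC".
MISSING_RE = re.compile(r"Can't locate (\S+)\.pm in @INC")


def missing_perl_modules(lines):
    """Return the Perl module names (e.g. 'CGI', 'Config::General') reported missing."""
    found = []
    for line in lines:
        match = MISSING_RE.search(line)
        if match:
            # Perl reports a path such as Config/General.pm for Config::General.
            name = match.group(1).replace("/", "::")
            if name not in found:
                found.append(name)
    return found
```

For instance, passing the opened /var/log/apache2/error.log file (readable as root) to missing_perl_modules lists each missing module once, in order of first appearance.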

Notice that you can dump the values of the different metrics, because they are stored in the /var/lib/collectd/rrd/ordinateur-cerin/ directory. For our Ubuntu machine, we obtain the following directories:

$ ll /var/lib/collectd/rrd/ordinateur-cerin/

total 268

drwxr-xr-x 67 root root 4096 janv. 15 11:18 ./

drwxr-xr-x 3 root root 4096 janv. 15 10:04 ../

drwxr-xr-x 2 root root 4096 janv. 15 10:04 battery-0/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 cpu-0/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 cpu-1/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 cpu-2/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 cpu-3/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 df-boot-efi/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 df-media-agathe-KINGSTON1/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 df-root/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 df-snap-chromium-1424/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 df-snap-chromium-1444/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 df-snap-core-10577/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 df-snap-core-10583/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 df-snap-core18-1932/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 df-snap-core18-1944/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 df-snap-gnome-3-26-1604-100/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 df-snap-gnome-3-26-1604-98/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 df-snap-gnome-3-28-1804-128/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 df-snap-gnome-3-28-1804-145/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 df-snap-gnome-3-34-1804-60/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 df-snap-gnome-3-34-1804-66/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 df-snap-gnome-system-monitor-145/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 df-snap-gnome-system-monitor-148/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 df-snap-gtk-common-themes-1513/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 df-snap-gtk-common-themes-1514/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 df-snap-ike-qt-7/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 df-snap-snap-store-498/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 df-snap-snap-store-518/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-loop0/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-loop1/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-loop10/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-loop11/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-loop12/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-loop13/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-loop14/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-loop15/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-loop16/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-loop17/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-loop18/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-loop19/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-loop2/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-loop20/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-loop3/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-loop4/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-loop5/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-loop6/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-loop7/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-loop8/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-loop9/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-sda/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-sda1/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-sda2/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-sda3/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-sdb/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 disk-sdb1/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 entropy/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 interface-eth0/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 interface-lo/

drwxr-xr-x 2 root root 4096 janv. 15 11:18 interface-lxcbr0/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 interface-wlan0/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 irq/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 load/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 memory/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 processes/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 swap/

drwxr-xr-x 2 root root 4096 janv. 15 10:04 users/
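The directory names above follow collectd's naming scheme: each directory is named plugin-instance (cpu-0, df-root, interface-lo), or just plugin when there is no instance (load, memory), and holds one RRD file per type and type instance, such as the load/load.rrd file dumped below. The Python sketch below, with hypothetical helper names, inventories such a host directory. Splitting on the first hyphen assumes that plugin names themselves contain no hyphen, which holds for the directories listed above.

```python
import os


def split_plugin_dir(dirname):
    """'cpu-0' -> ('cpu', '0'), 'df-boot-efi' -> ('df', 'boot-efi'), 'load' -> ('load', None)."""
    plugin, sep, instance = dirname.partition("-")
    return plugin, (instance if sep else None)


def list_rrd_files(host_dir):
    """Map (plugin, plugin_instance) to the sorted list of .rrd files it contains."""
    inventory = {}
    for entry in sorted(os.listdir(host_dir)):
        path = os.path.join(host_dir, entry)
        if os.path.isdir(path):
            rrds = sorted(f for f in os.listdir(path) if f.endswith(".rrd"))
            inventory[split_plugin_dir(entry)] = rrds
    return inventory
```

On the machine above, list_rrd_files("/var/lib/collectd/rrd/ordinateur-cerin") would return one entry per directory of the listing.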

After installing some tools for reading RRD files (sudo apt-get install rrdtool librrd-dev), we can observe the values of, let's say, the load, through:

$ rrdtool dump /var/lib/collectd/rrd/ordinateur-cerin/load/load.rrd | more

<?xml version="1.0" encoding="utf-8"?>

<!DOCTYPE rrd SYSTEM "https://oss.oetiker.ch/rrdtool/rrdtool.dtd">

<!-- Round Robin Database Dump -->

<rrd>

<version>0003</version>

<step>10</step> <!-- Seconds -->

<lastupdate>1610977063</lastupdate> <!-- 2021-01-18 14:37:43 CET -->

<ds>

<name> shortterm </name>

<type> GAUGE </type>

<minimal_heartbeat>20</minimal_heartbeat>

<min>0.0000000000e+00</min>

<max>5.0000000000e+03</max>

<!-- PDP Status -->

<last_ds>1.36</last_ds>

<value>4.0800000000e+00</value>

<unknown_sec> 0 </unknown_sec>

</ds>

<ds>

<name> midterm </name>

--More--
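Since rrdtool dump produces XML, its output can also be processed from Python with the standard xml.etree.ElementTree module. The sketch below (helper names are ours) extracts the step, the last update time and, for each data source, its type and last value. Data-source (ds) elements also appear nested inside the archives (rra/cdp_prep), so only the direct children of the root rrd element are considered.

```python
import subprocess
import xml.etree.ElementTree as ET


def rrd_dump(path):
    """Return the XML text produced by `rrdtool dump path`."""
    return subprocess.run(["rrdtool", "dump", path], capture_output=True,
                          text=True, check=True).stdout


def parse_rrd_dump(xml_text):
    """Extract step, lastupdate and, per data source, its type and last value."""
    root = ET.fromstring(xml_text)
    info = {
        "step": int(root.findtext("step")),
        "lastupdate": int(root.findtext("lastupdate")),
        "ds": {},
    }
    # findall("ds") only returns direct children of the root element: the ds
    # elements nested in rra/cdp_prep hold archive state, not definitions.
    for ds in root.findall("ds"):
        name = ds.findtext("name").strip()
        info["ds"][name] = {
            "type": ds.findtext("type").strip(),
            "last_ds": ds.findtext("last_ds").strip(),
        }
    return info
```

For the file above, parse_rrd_dump(rrd_dump("/var/lib/collectd/rrd/ordinateur-cerin/load/load.rrd")) gives a step of 10 seconds and the shortterm, midterm and longterm data sources.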

The lastupdate command returns the UNIX timestamp and the value stored for each data source in the most recent update of an RRD:

$ rrdtool lastupdate /var/lib/collectd/rrd/ordinateur-cerin/load/load.rrd | more

shortterm midterm longterm

1610977293: 1.56 1.47 1.22
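For scripting, the lastupdate output is easier to consume than a full dump. A minimal Python sketch, assuming the two-part layout shown above (a line of data-source names, then a "timestamp: values" line), runs the command through subprocess and maps each data source to its value; values that cannot be parsed as numbers (for instance unknown values) are returned as None. The function names are ours.

```python
import subprocess


def parse_lastupdate(output):
    """Parse `rrdtool lastupdate` output into (timestamp, {ds_name: value})."""
    lines = [line for line in output.splitlines() if line.strip()]
    names = lines[0].split()                      # e.g. shortterm midterm longterm
    stamp, _, raw_values = lines[-1].partition(":")
    values = {}
    for name, raw in zip(names, raw_values.split()):
        try:
            values[name] = float(raw)
        except ValueError:                        # e.g. an unknown value
            values[name] = None
    return int(stamp), values


def lastupdate(path):
    """Run `rrdtool lastupdate path` and parse its output."""
    result = subprocess.run(["rrdtool", "lastupdate", path],
                            capture_output=True, text=True, check=True)
    return parse_lastupdate(result.stdout)
```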

The full list of rrdtool commands is given in the RRDtool documentation. RRDtool presents itself as the OpenSource industry standard, high performance data logging and graphing system for time series data; it can easily be integrated in shell scripts, or in Perl, Python, Ruby, Lua or Tcl applications. Please refer to the tutorials.

In computer programming, to bind is to make an association between two or more programming objects or value items for some scope of time and place. A plug-in (or plugin, add-in, addin, add-on, or addon) is a software component that adds a specific feature to an existing computer program; a program that supports plug-ins can be customized. We essentially need a Collectd Python binding in order to interact, later, with LXC. For a general discussion, see How to write a Collectd plugin in Python.

First, install pip (a package manager for Python) if needed: sudo apt-get install python3-pip. Then install the collectd package for Python:

$ pip install collectd

WARNING: Keyring is skipped due to an exception: Failed to unlock the collection!

WARNING: Keyring is skipped due to an exception: Failed to unlock the collection!

Collecting collectd

WARNING: Keyring is skipped due to an exception: Failed to unlock the collection!

Downloading collectd-1.0.tar.gz (63 kB)

|████████████████████████████████| 63 kB 234 kB/s

Building wheels for collected packages: collectd

Building wheel for collectd (setup.py) ... done

Created wheel for collectd: filename=collectd-1.0-py3-none-any.whl size=4632 sha256=01f8960a97c5b3a20ac898e74fe3f7d9e57692a1d727a63315dd8843140cbdd9

Stored in directory: /home/agathe/.cache/pip/wheels/21/4b/fd/bd4f23f5eb4d69557d4e606d42a11481dd74b2a3eb0e2a0252

Successfully built collectd

Installing collected packages: collectd

Successfully installed collectd-1.0

The Python binding has been installed for Python 3 and not for Python 2, but importing it fails:

$ python3

Python 3.8.6 (default, Sep 25 2020, 09:36:53)

[GCC 10.2.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import collectd

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/home/cerin/.local/lib/python3.8/site-packages/collectd.py", line 8, in <module>

from Queue import Queue, Empty

ModuleNotFoundError: No module named 'Queue'

>>>

cerin@ordinateur-cerin:~/Images$ emacs /home/cerin/.local/lib/python3.8/site-packages/collectd.py

cerin@ordinateur-cerin:~/Images$ python3

Python 3.8.6 (default, Sep 25 2020, 09:36:53)

[GCC 10.2.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import collectd

>>>

Note that between the two calls to python3 we modified the /home/cerin/.local/lib/python3.8/site-packages/collectd.py file: we replaced the line from Queue import Queue, Empty by from queue import Queue, Empty, because in Python 3 the Queue module has been renamed queue.
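Rather than editing the installed file by hand, code that must run on both Python 2 and Python 3 usually guards the import. A minimal sketch of that idiom, with a small hypothetical helper (drain) showing that Queue and Empty behave identically under both names:

```python
# Import Queue/Empty under their Python 3 name, falling back to Python 2.
try:
    from queue import Queue, Empty   # Python 3
except ImportError:
    from Queue import Queue, Empty   # Python 2


def drain(q):
    """Return every item currently in q without blocking (hypothetical helper)."""
    items = []
    while True:
        try:
            items.append(q.get_nowait())
        except Empty:
            return items
```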

However, this fix is not sufficient to run the example from How to Write a Collectd Plugin with Python directly from the command line:

$ python3 cpu_temp.py

Traceback (most recent call last):

File "cpu_temp.py", line 38, in <module>

collectd.register_config(config_func)

AttributeError: module 'collectd' has no attribute 'register_config'

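The explanation is that python3 loads the /home/cerin/.local/lib/python3.8/site-packages/collectd.py module, installed from PyPI, which does not implement the collectd.register_config method. Indeed, a different collectd module, the one that provides register_config, comes with the Collectd application itself and is made available to the scripts loaded by the daemon's python plugin. The two modules conflict, so we can uninstall the PyPI one with pip uninstall collectd, and let the daemon, rather than python3, load the plugin.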
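A quick way to see which file a plain python3 resolves for the name collectd, and hence whether the PyPI package is still installed, is importlib.util.find_spec. The helper name module_origin is ours:

```python
import importlib.util


def module_origin(name):
    """Return the file that a plain interpreter would load for `name`, or None."""
    spec = importlib.util.find_spec(name)
    return None if spec is None else spec.origin
```

Running print(module_origin("collectd")) from python3 should print the ~/.local/.../collectd.py path while the PyPI package is installed, and None after pip uninstall collectd.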

Summarizing, to run the How to Write a Collectd Plugin with Python example, you need to put the cpu_temp.py file in the /usr/lib/collectd directory, and to add the following lines to the /etc/collectd/collectd.conf configuration file:

$ tail -15 /etc/collectd/collectd.conf

LoadPlugin python

<Plugin python>

ModulePath "/usr/lib/collectd"

Import "cpu_temp"

<Module cpu_temp>

Path "/sys/class/thermal/thermal_zone0/temp"

</Module>

</Plugin>

<Include "/etc/collectd/collectd.conf.d">

Filter "*.conf"

</Include>

Note: do not forget to restart the collectd daemon. See above.

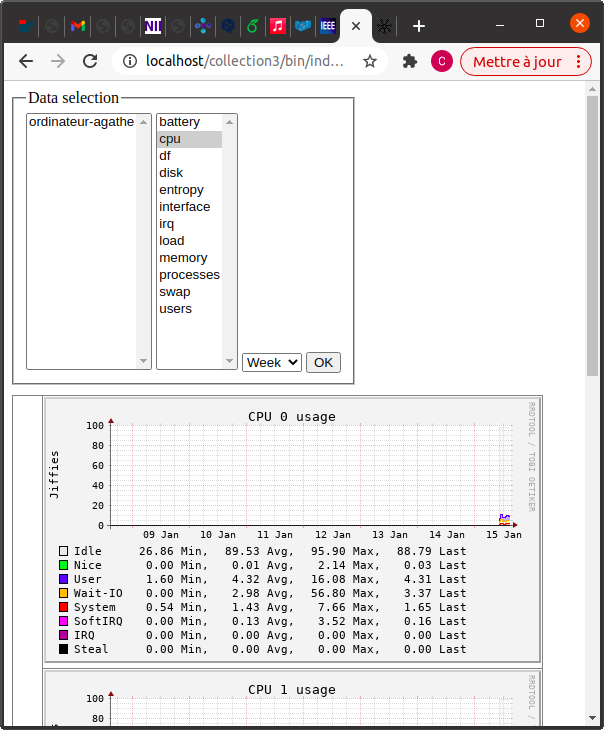

Figure 2 shows the result of integrating the CPU temperature plugin into Collectd.

In general, a container is any receptacle or enclosure for holding a product used in storage, packaging, and transportation, including shipping. In the computing field, a container is a standard unit of software that packages up code and all its dependencies so the application runs quickly and reliably from one computing environment to another. Containers are a method of virtualization that packages an application's code, configurations, and dependencies into building blocks for consistency, efficiency, productivity, and version control. Containers and virtual machines have similar resource isolation and allocation benefits, but function differently: containers virtualize the operating system instead of the hardware. As a result, containers are generally lighter and more portable than virtual machines.

Containers are nothing new: as early as 1982, Unix administrators could launch isolated processes, similar to today's containers, using the chroot command. The first modern container was probably Linux-VServer, released in 2001. Please refer to the Container Technology Wiki for more introductory material.

The most influential container technologies are:

Early versions of Docker used LXC as the container execution driver, though LXC was made optional in v0.9 and support was dropped in Docker v1.10. LXC relies on the Linux kernel cgroups functionality that was released in version 2.6.24. It also relies on other kinds of namespace isolation functionality, which were developed and integrated into the mainline Linux kernel.

We now invite the reader to run the LXC basic examples to become more familiar with the technology. Another excellent entry point is the Working with Linux containers post from Linux Journal.

As complementary practical work, please examine the following command line instructions, which play with Alpine and Gentoo containers (Alpine is a small, lightweight Linux distribution; Gentoo is a source-based Linux distribution):

$ lxc-create -t download -n Alpine1

Setting up the GPG keyring

Downloading the image index

---

DIST RELEASE ARCH VARIANT BUILD

---

alpine 3.10 amd64 default 20210118_14:55

alpine 3.10 arm64 default 20210118_13:01

alpine 3.10 armhf default 20210118_14:55

alpine 3.10 i386 default 20210118_14:55

alpine 3.10 ppc64el default 20210118_13:00

alpine 3.10 s390x default 20210118_14:55

alpine 3.11 amd64 default 20210117_13:00

alpine 3.11 arm64 default 20210118_13:01

alpine 3.11 armhf default 20210118_14:39

alpine 3.11 i386 default 20210118_14:55

alpine 3.11 ppc64el default 20210117_13:00

alpine 3.11 s390x default 20210118_14:55

alpine 3.12 amd64 default 20210118_14:55

alpine 3.12 arm64 default 20210118_13:01

alpine 3.12 armhf default 20210118_14:55

alpine 3.12 i386 default 20210118_13:28

alpine 3.12 ppc64el default 20210118_13:00

alpine 3.12 s390x default 20210118_14:55

alpine 3.13 amd64 default 20210118_13:28

alpine 3.13 arm64 default 20210118_13:01

alpine 3.13 armhf default 20210118_13:01

alpine 3.13 i386 default 20210118_14:56

alpine 3.13 ppc64el default 20210118_14:38

alpine 3.13 s390x default 20210118_14:55

alpine edge amd64 default 20210118_13:28

alpine edge arm64 default 20210117_13:00

alpine edge armhf default 20210117_13:00

alpine edge i386 default 20210118_13:01

alpine edge ppc64el default 20210118_13:28

alpine edge s390x default 20210118_14:55

alt Sisyphus amd64 default 20210119_01:17

alt Sisyphus arm64 default 20210119_01:17

alt Sisyphus i386 default 20210119_01:17

alt Sisyphus ppc64el default 20210119_01:17

alt p9 amd64 default 20210119_01:17

alt p9 arm64 default 20210119_01:17

alt p9 i386 default 20210119_01:17

apertis v2019.5 amd64 default 20210119_10:53

apertis v2019.5 arm64 default 20210119_10:53

apertis v2019.5 armhf default 20210119_10:53

apertis v2020.3 amd64 default 20210119_10:53

apertis v2020.3 arm64 default 20210119_10:53

apertis v2020.3 armhf default 20210119_10:53

archlinux current amd64 default 20210119_04:18

archlinux current arm64 default 20210119_04:18

archlinux current armhf default 20210119_04:18

centos 7 amd64 default 20210119_07:08

centos 7 armhf default 20210119_07:08

centos 7 i386 default 20210119_07:08

centos 8-Stream amd64 default 20210119_07:08

centos 8-Stream arm64 default 20210119_07:08

centos 8-Stream ppc64el default 20210119_07:08

centos 8 amd64 default 20210119_07:08

centos 8 arm64 default 20210119_07:08

centos 8 ppc64el default 20210119_07:08

debian bullseye amd64 default 20210119_05:24

debian bullseye arm64 default 20210119_05:24

debian bullseye armel default 20210119_05:24

debian bullseye armhf default 20210119_05:42

debian bullseye i386 default 20210119_05:24

debian bullseye ppc64el default 20210119_05:24

debian bullseye s390x default 20210119_05:24

debian buster amd64 default 20210119_05:24

debian buster arm64 default 20210119_05:24

debian buster armel default 20210119_05:44

debian buster armhf default 20210119_05:24

debian buster i386 default 20210119_05:24

debian buster ppc64el default 20210119_05:24

debian buster s390x default 20210119_05:24

debian sid amd64 default 20210119_05:24

debian sid arm64 default 20210119_05:24

debian sid armel default 20210119_05:24

debian sid armhf default 20210119_05:43

debian sid i386 default 20210119_05:24

debian sid ppc64el default 20210119_05:24

debian sid s390x default 20210119_05:24

debian stretch amd64 default 20210119_05:24

debian stretch arm64 default 20210119_05:24

debian stretch armel default 20210119_05:24

debian stretch armhf default 20210119_05:24

debian stretch i386 default 20210119_05:24

debian stretch ppc64el default 20210119_05:24

debian stretch s390x default 20210119_05:24

devuan ascii amd64 default 20210118_11:50

devuan ascii arm64 default 20210118_11:50

devuan ascii armel default 20210118_11:50

devuan ascii armhf default 20210118_11:50

devuan ascii i386 default 20210118_11:50

devuan beowulf amd64 default 20210118_11:50

devuan beowulf arm64 default 20210118_11:50

devuan beowulf armel default 20210118_11:50

devuan beowulf armhf default 20210118_11:50

devuan beowulf i386 default 20210118_11:50

fedora 32 amd64 default 20210118_20:33

fedora 32 arm64 default 20210118_20:33

fedora 32 ppc64el default 20210118_20:33

fedora 32 s390x default 20210118_20:33

fedora 33 amd64 default 20210118_20:33

fedora 33 arm64 default 20210118_20:33

fedora 33 ppc64el default 20210118_20:33

fedora 33 s390x default 20210118_20:33

funtoo 1.4 amd64 default 20210118_16:45

funtoo 1.4 armhf default 20210118_16:45

funtoo 1.4 i386 default 20210118_16:45

gentoo current amd64 default 20210117_16:07

gentoo current armhf default 20210118_16:07

gentoo current i386 default 20210118_17:50

gentoo current ppc64el default 20210118_16:07

gentoo current s390x default 20210118_16:07

kali current amd64 default 20210118_17:14

kali current arm64 default 20210118_17:14

kali current armel default 20210118_17:14

kali current armhf default 20210118_17:14

kali current i386 default 20210118_17:14

mint sarah amd64 default 20210119_08:51

mint sarah i386 default 20210119_08:51

mint serena amd64 default 20210119_08:51

mint serena i386 default 20210119_08:51

mint sonya amd64 default 20210119_08:51

mint sonya i386 default 20210119_08:51

mint sylvia amd64 default 20210119_08:51

mint sylvia i386 default 20210119_08:51

mint tara amd64 default 20210119_08:51

mint tara i386 default 20210119_08:51

mint tessa amd64 default 20210119_08:51

mint tessa i386 default 20210119_08:51

mint tina amd64 default 20210119_08:51

mint tina i386 default 20210119_08:51

mint tricia amd64 default 20210119_08:51

mint tricia i386 default 20210119_08:51

mint ulyana amd64 default 20210119_08:51

mint ulyssa amd64 default 20210119_08:51

mint ulyssa i386 default 20210104_09:05

opensuse 15.1 amd64 default 20210119_04:20

opensuse 15.1 arm64 default 20210119_04:20

opensuse 15.1 ppc64el default 20210119_04:20

opensuse 15.2 amd64 default 20210119_04:20

opensuse 15.2 arm64 default 20210119_04:20

opensuse 15.2 ppc64el default 20210119_04:20

opensuse tumbleweed amd64 default 20210119_04:44

opensuse tumbleweed arm64 default 20210119_04:20

opensuse tumbleweed i386 default 20210119_04:20

opensuse tumbleweed ppc64el default 20210119_04:20

openwrt 18.06 amd64 default 20210118_11:57

openwrt 19.07 amd64 default 20210118_11:57

openwrt snapshot amd64 default 20210118_11:57

oracle 6 amd64 default 20210119_07:46

oracle 6 i386 default 20210119_07:46

oracle 7 amd64 default 20210119_07:46

oracle 8 amd64 default 20210119_07:46

plamo 6.x amd64 default 20210119_01:33

plamo 6.x i386 default 20210119_01:33

plamo 7.x amd64 default 20210119_01:33

pld current amd64 default 20210118_20:46

pld current i386 default 20210118_20:46

sabayon current amd64 default 20210119_01:52

ubuntu bionic amd64 default 20210119_07:42

ubuntu bionic arm64 default 20210119_07:42

ubuntu bionic armhf default 20210119_07:42

ubuntu bionic i386 default 20210119_07:42

ubuntu bionic ppc64el default 20210119_07:42

ubuntu bionic s390x default 20210119_07:42

ubuntu focal amd64 default 20210119_07:42

ubuntu focal arm64 default 20210119_07:42

ubuntu focal armhf default 20210119_07:42

ubuntu focal ppc64el default 20210119_07:42

ubuntu focal s390x default 20210119_07:50

ubuntu groovy amd64 default 20210119_07:42

ubuntu groovy arm64 default 20210119_07:42

ubuntu groovy armhf default 20210119_07:42

ubuntu groovy ppc64el default 20210119_07:42

ubuntu groovy s390x default 20210119_07:42

ubuntu hirsute amd64 default 20210119_07:42

ubuntu hirsute arm64 default 20210119_07:42

ubuntu hirsute armhf default 20210119_07:42

ubuntu hirsute ppc64el default 20210119_07:59

ubuntu hirsute s390x default 20210119_07:50

ubuntu trusty amd64 default 20210119_07:42

ubuntu trusty arm64 default 20210119_07:42

ubuntu trusty armhf default 20210119_07:42

ubuntu trusty i386 default 20210119_07:42

ubuntu trusty ppc64el default 20210119_07:42

ubuntu xenial amd64 default 20210119_07:42

ubuntu xenial arm64 default 20210119_07:42

ubuntu xenial armhf default 20210119_07:43

ubuntu xenial i386 default 20210119_07:42

ubuntu xenial ppc64el default 20210119_07:42

ubuntu xenial s390x default 20210119_07:42

voidlinux current amd64 default 20210118_17:10

voidlinux current arm64 default 20210118_17:10

voidlinux current armhf default 20210118_17:10

voidlinux current i386 default 20210118_17:10

---

Distribution:

alpine

Release:

3.13

Architecture:

i386

Using image from local cache

Unpacking the rootfs

---

You just created an Alpinelinux 3.13 x86 (20210118_14:56) container.

$ lxc-start -n Alpine1

$ lxc-info -n Alpine1

Name: Alpine1

State: RUNNING

PID: 314776

IP: 10.0.3.204

Link: veth1000_sf0E

TX bytes: 1.68 KiB

RX bytes: 6.69 KiB

Total bytes: 8.38 KiB
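Since the end goal is to feed container metrics to collectd, it helps to turn this lxc-info output into data. A minimal Python sketch, with hypothetical helper names, splits each line at its first colon (so IPv6 addresses survive) and keeps a list per key, because a container can report several IP lines. The unit table in to_bytes is an assumption that covers the KiB suffix seen above and its usual binary siblings.

```python
import subprocess

# Binary multipliers; the exact set of suffixes lxc-info prints is an
# assumption beyond the KiB values shown above.
UNITS = {"B": 1, "bytes": 1, "KiB": 1024, "MiB": 1024 ** 2, "GiB": 1024 ** 3}


def parse_lxc_info(text):
    """Map each lxc-info key to the list of its values (IP may appear twice)."""
    info = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")  # first colon only: IPv6-safe
        if sep:
            info.setdefault(key.strip(), []).append(value.strip())
    return info


def to_bytes(human):
    """Convert a size such as '1.68 KiB' to a number of bytes."""
    number, unit = human.split()
    return float(number) * UNITS[unit]


def lxc_info(name):
    """Run `lxc-info -n name` and parse its output."""
    out = subprocess.run(["lxc-info", "-n", name], capture_output=True,
                         text=True, check=True).stdout
    return parse_lxc_info(out)
```

For the container above, lxc_info("Alpine1")["State"] would be ["RUNNING"], and to_bytes applied to the TX bytes entry gives about 1720 bytes.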

$ lxc-attach -n Alpine1

/ # ls

bin etc lib mnt proc run srv tmp var

dev home media opt root sbin sys usr

/ # exit

$ lxc-create -t download -n Alpine2

Setting up the GPG keyring

Downloading the image index


oracle8amd64default20210119_07:46

plamo6.xamd64default20210119_01:33

plamo6.xi386default20210119_01:33

plamo7.xamd64default20210119_01:33

pldcurrentamd64default20210118_20:46

pldcurrenti386default20210118_20:46

sabayoncurrentamd64default20210119_01:52

ubuntubionicamd64default20210119_07:42

ubuntubionicarm64default20210119_07:42

ubuntubionicarmhfdefault20210119_07:42

ubuntubionici386default20210119_07:42

ubuntubionicppc64eldefault20210119_07:42

ubuntubionics390xdefault20210119_07:42

ubuntufocalamd64default20210119_07:42

ubuntufocalarm64default20210119_07:42

ubuntufocalarmhfdefault20210119_07:42

ubuntufocalppc64eldefault20210119_07:42

ubuntufocals390xdefault20210119_07:50

ubuntugroovyamd64default20210119_07:42

ubuntugroovyarm64default20210119_07:42

ubuntugroovyarmhfdefault20210119_07:42

ubuntugroovyppc64eldefault20210119_07:42

ubuntugroovys390xdefault20210119_07:42

ubuntuhirsuteamd64default20210119_07:42

ubuntuhirsutearm64default20210119_07:42

ubuntuhirsutearmhfdefault20210119_07:42

ubuntuhirsuteppc64eldefault20210119_07:59

ubuntuhirsutes390xdefault20210119_07:50

ubuntutrustyamd64default20210119_07:42

ubuntutrustyarm64default20210119_07:42

ubuntutrustyarmhfdefault20210119_07:42

ubuntutrustyi386default20210119_07:42

ubuntutrustyppc64eldefault20210119_07:42

ubuntuxenialamd64default20210119_07:42

ubuntuxenialarm64default20210119_07:42

ubuntuxenialarmhfdefault20210119_07:43

ubuntuxeniali386default20210119_07:42

ubuntuxenialppc64eldefault20210119_07:42

ubuntuxenials390xdefault20210119_07:42

voidlinuxcurrentamd64default20210118_17:10

voidlinuxcurrentarm64default20210118_17:10

voidlinuxcurrentarmhfdefault20210118_17:10

voidlinuxcurrenti386default20210118_17:10

---

Distribution:

gentoo

Release:

current

Architecture:

amd64

Downloading the image index

Downloading the rootfs

Downloading the metadata

The image cache is now ready

Unpacking the rootfs

---

You just created an Gentoo amd64 (20210117_16:07) container.

$ lxc-start -n Alpine2 -d

$ lxc-info -n Alpine2

Name: Alpine2

State: RUNNING

PID: 315400

IP: 10.0.3.158

Link: veth1000_63C1

TX bytes: 10.93 KiB

RX bytes: 84.34 KiB

Total bytes: 95.27 KiB

$ lxc-ls -f

NAME STATE AUTOSTART GROUPS IPV4 IPV6 UNPRIVILEGED

Alpine1 RUNNING 0 - 10.0.3.204 - true

Alpine2 RUNNING 0 - 10.0.3.158 - true

agathe@ordinateur-agathe:~$ lxc-attach -n Alpine2

Alpine2 / # ls

bin dev home lib64 mnt proc run sys usr

boot etc lib media opt root sbin tmp var

Alpine2 / # exit

exit
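The same information can also be collected from a script. The sketch below is ours, not part of LXC: it runs `lxc-info -n <name>` with the subprocess module and turns the `Key: value` lines it prints into a dictionary. Parsing is kept separate from the command so that it can be checked against output like the one above; for containers created with sudo, the script itself has to run with sudo.

```python
import subprocess


def parse_lxc_info(text):
    """Turn the 'Key: value' lines printed by lxc-info into a dict.

    Repeated keys (for instance several 'IP:' lines) keep the last value.
    """
    info = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")  # split on the first colon only
        if sep:  # skip blank lines and lines without a colon
            info[key.strip()] = value.strip()
    return info


def lxc_info(name):
    """Run lxc-info for one container and parse its output."""
    out = subprocess.run(["lxc-info", "-n", name],
                         capture_output=True, text=True, check=True).stdout
    return parse_lxc_info(out)
```

For the container above, `lxc_info("Alpine2")["State"]` should return `'RUNNING'`.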

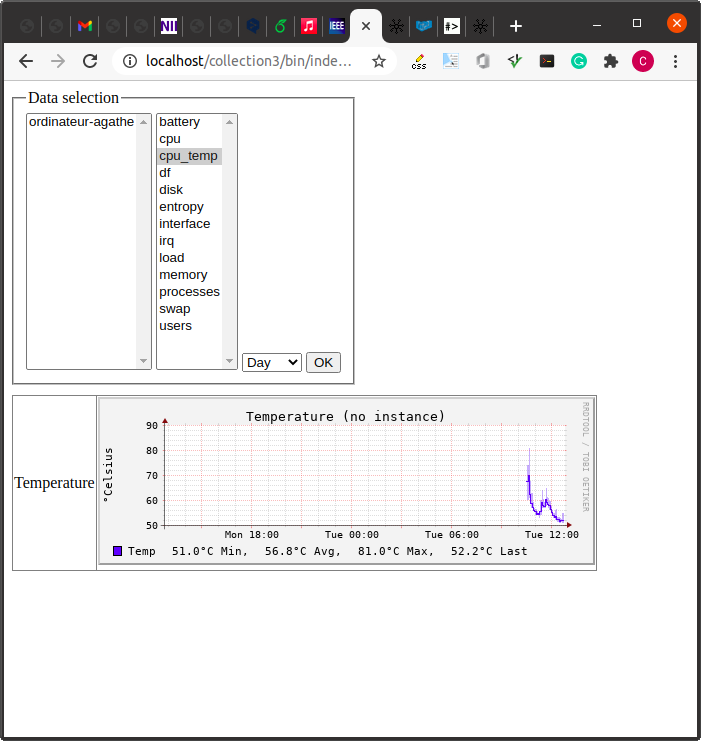

In this section we use the following Python plugin to monitor the LXC containers deployed on our computer. To carry out this work, we follow the guidelines given earlier, when we talked about installing a Python plugin (see above). Please follow the instructions given for the installation of the plugin. First, after downloading the requirements.txt file from the site, run the following command:

$ sudo pip install -r requirements.txt --upgrade

Collecting nsenter

Downloading nsenter-0.2-py3-none-any.whl (12 kB)

Collecting pathlib

Downloading pathlib-1.0.1.tar.gz (49 kB)

|████████████████████████████████| 49 kB 503 kB/s

Collecting argparse

Downloading argparse-1.4.0-py2.py3-none-any.whl (23 kB)

Collecting contextlib2

Downloading contextlib2-0.6.0.post1-py2.py3-none-any.whl (9.8 kB)

Building wheels for collected packages: pathlib

Building wheel for pathlib (setup.py) ... done

Created wheel for pathlib: filename=pathlib-1.0.1-py3-none-any.whl size=14346 sha256=11c451242a813ddb8c8b98e32ad6fc340918f2fe2dfb161a8a9c8de81e0ad813

Stored in directory: /root/.cache/pip/wheels/59/02/2f/ff4a3e16a518feb111ae1405908094483ef56fec0dfa39e571

Successfully built pathlib

Installing collected packages: pathlib, argparse, contextlib2, nsenter

Successfully installed argparse-1.4.0 contextlib2-0.6.0.post1 nsenter-0.2 pathlib-1.0.1

Second, download the plugin file and put it in the /usr/lib/collectd/ directory, as before, since this is the directory for our plugins. Third, create the /etc/collectd/collectd.conf.d/lxc.conf configuration file with the following lines:

LoadPlugin python

<Plugin python>

ModulePath "/usr/lib/collectd/"

LogTraces true

Interactive false

Import "collectd_lxc"

<Module collectd_lxc>

</Module>

</Plugin>

Do not forget to restart the collectd daemon:

$ sudo systemctl restart collectd

$ sudo systemctl enable collectd.service

Synchronizing state of collectd.service with SysV service script with /lib/systemd/systemd-sysv-install.

Executing: /lib/systemd/systemd-sysv-install enable collectd

Important note: the above instructions and the Python plugin are only valid for an Ubuntu 18.04 release. The main reason is that more recent releases, with their newer Linux kernels (5.8 in our case, with an Ubuntu 20.10 release), make cgroup version 1 and cgroup version 2 co-exist. As a consequence, the file system organisation is completely different from that of older versions. Since the Python plugin depends on this organisation, it is unable to extract correct information about the LXC containers on kernels 5.8 and above.

Consequently, we need to adapt the previous script. First, read the "Understanding control groups" post. Control groups (cgroups) are a Linux kernel feature that lets you organize processes into hierarchically ordered groups. The hierarchy (the control groups tree) is defined by giving structure to the cgroup virtual file system, mounted by default on the /sys/fs/cgroup/ directory. This can be done manually, by creating and removing sub-directories in /sys/fs/cgroup/, or by using the systemd system and service manager. See also the cgroup-v2 document from kernel.org, a technical document intended for expert users.
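To see which controllers the running kernel offers, one can read /proc/cgroups, whose columns are subsys_name, hierarchy, num_cgroups and enabled. The sketch below, with function names of our own, parses it. A hierarchy ID of 0 means that the controller is not mounted on any cgroup v1 hierarchy: it is then either bound to the cgroup v2 hierarchy, unused or disabled.

```python
def parse_proc_cgroups(text):
    """Parse /proc/cgroups into {controller: (hierarchy_id, num_cgroups, enabled)}.

    Assumes the classic four-column layout shown in the header line.
    """
    controllers = {}
    for line in text.splitlines():
        if not line.strip() or line.startswith("#"):
            continue  # skip the header line and blank lines
        name, hierarchy, num, enabled = line.split()
        controllers[name] = (int(hierarchy), int(num), enabled == "1")
    return controllers


def read_proc_cgroups(path="/proc/cgroups"):
    """Read and parse the controller table of the running kernel."""
    with open(path) as f:
        return parse_proc_cgroups(f.read())
```

For instance, `[n for n, (h, _, on) in read_proc_cgroups().items() if on and h != 0]` lists the enabled controllers that are mounted on a v1 hierarchy.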

Second, verify that both cgroup v1 and cgroup v2 have been enabled:

$ mount -l | grep cgroup

tmpfs on /sys/fs/cgroup type tmpfs (ro,nosuid,nodev,noexec,size=4096k,nr_inodes=1024,mode=755)

cgroup2 on /sys/fs/cgroup/unified type cgroup2 (rw,nosuid,nodev,noexec,relatime)

cgroup on /sys/fs/cgroup/systemd type cgroup (rw,nosuid,nodev,noexec,relatime,xattr,name=systemd)

cgroup on /sys/fs/cgroup/cpuset type cgroup (rw,nosuid,nodev,noexec,relatime,cpuset,clone_children)

cgroup on /sys/fs/cgroup/net_cls,net_prio type cgroup (rw,nosuid,nodev,noexec,relatime,net_cls,net_prio)

cgroup on /sys/fs/cgroup/perf_event type cgroup (rw,nosuid,nodev,noexec,relatime,perf_event)

cgroup on /sys/fs/cgroup/devices type cgroup (rw,nosuid,nodev,noexec,relatime,devices)

cgroup on /sys/fs/cgroup/pids type cgroup (rw,nosuid,nodev,noexec,relatime,pids)

cgroup on /sys/fs/cgroup/blkio type cgroup (rw,nosuid,nodev,noexec,relatime,blkio)

cgroup on /sys/fs/cgroup/memory type cgroup (rw,nosuid,nodev,noexec,relatime,memory)

cgroup on /sys/fs/cgroup/cpu,cpuacct type cgroup (rw,nosuid,nodev,noexec,relatime,cpu,cpuacct)

cgroup on /sys/fs/cgroup/hugetlb type cgroup (rw,nosuid,nodev,noexec,relatime,hugetlb)

cgroup on /sys/fs/cgroup/freezer type cgroup (rw,nosuid,nodev,noexec,relatime,freezer)

cgroup on /sys/fs/cgroup/rdma type cgroup (rw,nosuid,nodev,noexec,relatime,rdma)

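In the above listing we can check that both cgroup v1 (the cgroup entries) and cgroup v2 (the cgroup2 entry mounted on /sys/fs/cgroup/unified) are enabled. We can also notice the /sys/fs/cgroup/freezer directory. The freezer controller makes it possible to suspend and resume all the processes of a cgroup; on our system, its hierarchy also contains per-user sub-directories (for instance user/agathe/0/).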
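The check above can be automated. The sketch below classifies the host from the lines of /proc/self/mounts, which carry the same information as `mount -l`: cgroup v1 and cgroup2 mounts together mean the hybrid setup shown above, v1 mounts alone mean the legacy setup, and a cgroup2 mount alone means the unified setup. The function names are ours; the three labels are borrowed from systemd's terminology.

```python
def cgroup_mode(mount_lines):
    """Classify the host cgroup setup as 'hybrid', 'legacy', 'unified' or 'none'.

    Each line follows the /proc/self/mounts layout:
    device mountpoint fstype options dump pass
    """
    has_v1 = has_v2 = False
    for line in mount_lines:
        fields = line.split()
        if len(fields) < 3:
            continue  # ignore empty or malformed lines
        fstype = fields[2]
        if fstype == "cgroup":
            has_v1 = True
        elif fstype == "cgroup2":
            has_v2 = True
    if has_v1 and has_v2:
        return "hybrid"   # e.g. cgroup2 on /sys/fs/cgroup/unified, as above
    if has_v1:
        return "legacy"   # cgroup v1 controllers only
    if has_v2:
        return "unified"  # a single cgroup2 hierarchy
    return "none"


def host_cgroup_mode(path="/proc/self/mounts"):
    """Apply cgroup_mode() to the mount table of the running system."""
    with open(path) as f:
        return cgroup_mode(f.readlines())
```

On the machine used for the listing above, `host_cgroup_mode()` should return `'hybrid'`.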

To list all the active units on the system, run the systemctl command; the terminal returns an output similar to the following example. Check that lxc-net.service and lxc.service are present in the output:

$ systemctl

UNIT >

proc-sys-fs-binfmt_misc.automount >

dev-loop0.device >

dev-loop20.device >

sys-devices-pci0000:00-0000:00:02.0-drm-card0-card0\x2dLVDS\x2d1-intel_backlight.device >

sys-devices-pci0000:00-0000:00:14.0-usb3-3\x2d2-3\x2d2:1.0-host6-target6:0:0-6:0:0:0-block-sdb-sdb1.devic>

sys-devices-pci0000:00-0000:00:14.0-usb3-3\x2d2-3\x2d2:1.0-host6-target6:0:0-6:0:0:0-block-sdb.device >

sys-devices-pci0000:00-0000:00:14.0-usb3-3\x2d3-3\x2d3:1.0-bluetooth-hci0.device >

sys-devices-pci0000:00-0000:00:1b.0-sound-card0.device >

sys-devices-pci0000:00-0000:00:1c.1-0000:02:00.0-net-wlan0.device >

sys-devices-pci0000:00-0000:00:1c.3-0000:03:00.0-net-eth0.device >

sys-devices-pci0000:00-0000:00:1f.2-ata1-host0-target0:0:0-0:0:0:0-block-sda-sda1.device >

sys-devices-pci0000:00-0000:00:1f.2-ata1-host0-target0:0:0-0:0:0:0-block-sda-sda2.device >

sys-devices-pci0000:00-0000:00:1f.2-ata1-host0-target0:0:0-0:0:0:0-block-sda-sda3.device >

sys-devices-pci0000:00-0000:00:1f.2-ata1-host0-target0:0:0-0:0:0:0-block-sda.device >

sys-devices-platform-serial8250-tty-ttyS0.device >

sys-devices-platform-serial8250-tty-ttyS1.device >

sys-devices-platform-serial8250-tty-ttyS10.device >

sys-devices-platform-serial8250-tty-ttyS11.device >

sys-devices-platform-serial8250-tty-ttyS12.device >

sys-devices-platform-serial8250-tty-ttyS13.device >

sys-devices-platform-serial8250-tty-ttyS14.device >

sys-devices-platform-serial8250-tty-ttyS15.device >

sys-devices-platform-serial8250-tty-ttyS16.device >

sys-devices-platform-serial8250-tty-ttyS17.device >

sys-devices-platform-serial8250-tty-ttyS18.device >

sys-devices-platform-serial8250-tty-ttyS19.device >

sys-devices-platform-serial8250-tty-ttyS2.device >

sys-devices-platform-serial8250-tty-ttyS20.device >

sys-devices-platform-serial8250-tty-ttyS21.device >

sys-devices-platform-serial8250-tty-ttyS22.device >

sys-devices-platform-serial8250-tty-ttyS23.device >

sys-devices-platform-serial8250-tty-ttyS24.device >

sys-devices-platform-serial8250-tty-ttyS25.device >

sys-devices-platform-serial8250-tty-ttyS26.device >

sys-devices-platform-serial8250-tty-ttyS27.device >

sys-devices-platform-serial8250-tty-ttyS28.device >

sys-devices-platform-serial8250-tty-ttyS29.device >

sys-devices-platform-serial8250-tty-ttyS3.device >

sys-devices-platform-serial8250-tty-ttyS30.device >

sys-devices-platform-serial8250-tty-ttyS31.device >

sys-devices-platform-serial8250-tty-ttyS4.device >

sys-devices-platform-serial8250-tty-ttyS5.device >

sys-devices-platform-serial8250-tty-ttyS6.device >

sys-devices-platform-serial8250-tty-ttyS7.device >

sys-devices-platform-serial8250-tty-ttyS8.device >

sys-devices-platform-serial8250-tty-ttyS9.device >

sys-devices-virtual-block-loop10.device >

sys-devices-virtual-block-loop11.device >

sys-devices-virtual-block-loop12.device >

sys-devices-virtual-block-loop13.device >

sys-devices-virtual-block-loop14.device >

sys-devices-virtual-block-loop15.device >

sys-devices-virtual-block-loop16.device >

sys-devices-virtual-block-loop17.device >

sys-devices-virtual-block-loop18.device >

sys-devices-virtual-block-loop19.device >

sys-devices-virtual-block-loop2.device >

sys-devices-virtual-block-loop21.device >

sys-devices-virtual-block-loop3.device >

sys-devices-virtual-block-loop4.device >

sys-devices-virtual-block-loop5.device >

sys-devices-virtual-block-loop6.device >

sys-devices-virtual-block-loop7.device >

sys-devices-virtual-block-loop8.device >

sys-devices-virtual-block-loop9.device >

sys-devices-virtual-misc-rfkill.device >

sys-devices-virtual-net-lxcbr0.device >

sys-devices-virtual-net-veth1000_KBXg.device >

sys-devices-virtual-tty-ttyprintk.device >

sys-module-configfs.device >

sys-module-fuse.device >

sys-subsystem-bluetooth-devices-hci0.device >

sys-subsystem-net-devices-eth0.device >

sys-subsystem-net-devices-lxcbr0.device >

sys-subsystem-net-devices-veth1000_KBXg.device >

sys-subsystem-net-devices-wlan0.device >

-.mount >

boot-efi.mount >

dev-hugepages.mount >

dev-mqueue.mount >

media-agathe-KINGSTON1.mount >

proc-sys-fs-binfmt_misc.mount >

run-snapd-ns-chromium.mnt.mount >

run-snapd-ns-ike\x2dqt.mnt.mount >

run-snapd-ns-lxd.mnt.mount >

run-snapd-ns.mount >

run-user-1000-gvfs.mount >

run-user-1000.mount >

snap-chromium-1461.mount >

snap-chromium-1466.mount >

snap-core-10577.mount >

snap-core-10583.mount >

snap-core18-1932.mount >

snap-core18-1944.mount >

snap-gnome\x2d3\x2d26\x2d1604-100.mount >

snap-gnome\x2d3\x2d26\x2d1604-98.mount >

snap-gnome\x2d3\x2d28\x2d1804-128.mount >

snap-gnome\x2d3\x2d28\x2d1804-145.mount >

snap-gnome\x2d3\x2d34\x2d1804-60.mount >

snap-gnome\x2d3\x2d34\x2d1804-66.mount >

snap-gnome\x2dsystem\x2dmonitor-145.mount >

snap-gnome\x2dsystem\x2dmonitor-148.mount >

snap-gtk\x2dcommon\x2dthemes-1513.mount >

snap-gtk\x2dcommon\x2dthemes-1514.mount >

snap-ike\x2dqt-7.mount >

snap-lxd-18884.mount >

snap-lxd-19009.mount >

snap-snap\x2dstore-498.mount >

snap-snap\x2dstore-518.mount >

sys-fs-fuse-connections.mount >

sys-kernel-config.mount >

sys-kernel-debug-tracing.mount >

sys-kernel-debug.mount >

sys-kernel-tracing.mount >

var-lib-lxcfs.mount >

acpid.path >

apport-autoreport.path >

cups.path >

resolvconf-pull-resolved.path >

systemd-ask-password-plymouth.path >

systemd-ask-password-wall.path >

init.scope >

session-2.scope >

accounts-daemon.service >

acpid.service >

alsa-restore.service >

apache2.service >

apparmor.service >

apport.service >

avahi-daemon.service >

binfmt-support.service >

blk-availability.service >

bluetooth.service >

collectd.service >

colord.service >

console-setup.service >

cron.service >

cups-browsed.service >

cups.service >

dbus.service >

fwupd.service >

gdm.service >

grub-common.service >

hddtemp.service >

ifupdown-pre.service >

irqbalance.service >

kerneloops.service >

keyboard-setup.service >

kmod-static-nodes.service >

lm-sensors.service >

lvm2-monitor.service >

lxc-net.service >

lxc.service >

lxcfs.service >

ModemManager.service >

networkd-dispatcher.service >

networking.service >

● NetworkManager-wait-online.service >

NetworkManager.service >

openvpn.service >

packagekit.service >

plymouth-quit-wait.service >

plymouth-read-write.service >

plymouth-start.service >

polkit.service >

resolvconf.service >

rsyslog.service >

rtkit-daemon.service >

setvtrgb.service >

● snap.ike-qt.iked.service >

snapd.apparmor.service >

snapd.seeded.service >

snapd.service >

● sssd.service >

● strongswan-starter.service >

switcheroo-control.service >

systemd-backlight@backlight:intel_backlight.service >

systemd-fsck@dev-disk-by\x2duuid-5319\x2dC9E9.service >

systemd-journal-flush.service >

systemd-journald.service >

systemd-logind.service >

systemd-modules-load.service >

systemd-random-seed.service >

systemd-remount-fs.service >

systemd-resolved.service >

systemd-sysctl.service >

systemd-sysusers.service >

systemd-timesyncd.service >

systemd-tmpfiles-setup-dev.service >

systemd-tmpfiles-setup.service >

systemd-udev-trigger.service >

systemd-udevd.service >

systemd-update-utmp.service >

systemd-user-sessions.service >

thermald.service >

udisks2.service >

ufw.service >

unattended-upgrades.service >

upower.service >

● ureadahead.service >

user-runtime-dir@1000.service >

user@1000.service >

● virtualbox.service >

vpn-unlimited-daemon.service >

whoopsie.service >

wpa_supplicant.service >

-.slice >

system-getty.slice >

system-modprobe.slice >

system-systemd\x2dbacklight.slice >

system-systemd\x2dfsck.slice >

system.slice >

user-1000.slice >

user.slice >

acpid.socket >

avahi-daemon.socket >

cups.socket >

dbus.socket >

dm-event.socket >

lvm2-lvmpolld.socket >

snap.lxd.daemon.unix.socket >

snapd.socket >

syslog.socket >

systemd-fsckd.socket >

systemd-initctl.socket >

systemd-journald-audit.socket >

systemd-journald-dev-log.socket >

systemd-journald.socket >

systemd-rfkill.socket >

systemd-udevd-control.socket >

systemd-udevd-kernel.socket >

uuidd.socket >

dev-disk-by\x2duuid-5e07c03e\x2da36c\x2d4420\x2d80ee\x2d77f6daecb6b4.swap >

basic.target >

bluetooth.target >

cryptsetup.target >

getty.target >

graphical.target >

local-fs-pre.target >

local-fs.target >

multi-user.target >

network-online.target >

network-pre.target >

network.target >

nss-lookup.target >

nss-user-lookup.target >

paths.target >

remote-fs.target >

slices.target >

sockets.target >

sound.target >

swap.target >

sysinit.target >

time-set.target >

time-sync.target >

timers.target >

anacron.timer >

apt-daily-upgrade.timer >

apt-daily.timer >

e2scrub_all.timer >

fstrim.timer >

fwupd-refresh.timer >

logrotate.timer >

man-db.timer >

motd-news.timer >

systemd-tmpfiles-clean.timer >

update-notifier-download.timer >

update-notifier-motd.timer >

ureadahead-stop.timer >

LOAD = Reflects whether the unit definition was properly loaded.

ACTIVE = The high-level unit activation state, i.e. generalization of SUB.

SUB = The low-level unit activation state, values depend on unit type.

269 loaded units listed. Pass --all to see loaded but inactive units, too.

To show all installed unit files use 'systemctl list-unit-files'.
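Scrolling through such a listing by hand is tedious. The sketch below, with helper names of our own, asks systemctl for service units in a script-friendly form (the --no-legend and --plain options remove the header, the legend and the bullet markers) and extracts the LOAD, ACTIVE and SUB columns, so that the state of lxc.service and lxc-net.service can be checked directly.

```python
import subprocess


def parse_units(text):
    """Map unit name -> (load, active, sub) from 'list-units --no-legend --plain'."""
    units = {}
    for line in text.splitlines():
        # Defensively drop a leading bullet marker, in case one is printed
        line = line.lstrip().lstrip("●").strip()
        fields = line.split(None, 4)  # UNIT LOAD ACTIVE SUB DESCRIPTION
        if len(fields) >= 4:
            units[fields[0]] = tuple(fields[1:4])
    return units


def service_states():
    """Return the states of all loaded service units."""
    out = subprocess.run(
        ["systemctl", "list-units", "--type=service", "--all",
         "--no-legend", "--plain"],
        capture_output=True, text=True, check=True).stdout
    return parse_units(out)
```

For example, `{k: v for k, v in service_states().items() if k.startswith("lxc")}` keeps only the LXC units.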

To display detailed information about a given unit, including its place in the cgroup hierarchy, run systemctl status, here for the lxc service (since it is present on our system):

$ systemctl status lxc.service

● lxc.service - LXC Container Initialization and Autoboot Code

Loaded: loaded (/lib/systemd/system/lxc.service; enabled; vendor preset: enabled)

Active: active (exited) since Wed 2021-01-20 11:25:34 CET; 1 weeks 0 days ago

Docs: man:lxc-autostart

man:lxc

Main PID: 1764 (code=exited, status=0/SUCCESS)

Tasks: 0 (limit: 4506)

Memory: 0B

CGroup: /system.slice/lxc.service

janv. 28 10:12:04 ordinateur-agathe systemd[1]: /lib/systemd/system/lxc.service:17: Standard output type sy>

janv. 28 10:12:04 ordinateur-agathe systemd[1]: /lib/systemd/system/lxc.service:18: Standard output type sy>

janv. 28 10:13:03 ordinateur-agathe systemd[1]: /lib/systemd/system/lxc.service:17: Standard output type sy>

janv. 28 10:13:03 ordinateur-agathe systemd[1]: /lib/systemd/system/lxc.service:18: Standard output type sy>

janv. 28 10:13:05 ordinateur-agathe systemd[1]: /lib/systemd/system/lxc.service:17: Standard output type sy>

janv. 28 10:13:05 ordinateur-agathe systemd[1]: /lib/systemd/system/lxc.service:18: Standard output type sy>

janv. 28 10:13:06 ordinateur-agathe systemd[1]: /lib/systemd/system/lxc.service:17: Standard output type sy>

janv. 28 10:13:06 ordinateur-agathe systemd[1]: /lib/systemd/system/lxc.service:18: Standard output type sy>

janv. 28 10:13:47 ordinateur-agathe systemd[1]: /lib/systemd/system/lxc.service:17: Standard output type sy>

janv. 28 10:13:47 ordinateur-agathe systemd[1]: /lib/systemd/system/lxc.service:18: Standard output type sy>

agathe@ordinateur-agathe:/sys/fs/cgroup/freezer/user/agathe/0/lxc.monitor.Alpine$
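systemctl status only reports the cgroup of a unit. For an arbitrary process, such as the init process of a container whose PID is given by lxc-info, the kernel exposes the same information in /proc/<pid>/cgroup, with one `hierarchy-ID:controller-list:cgroup-path` line per hierarchy; the cgroup v2 entry has ID 0 and an empty controller list. A parsing sketch, with helper names of our own:

```python
def parse_proc_pid_cgroup(text):
    """Return {controller-list: cgroup-path} from /proc/<pid>/cgroup.

    The cgroup v2 entry (hierarchy ID 0, empty controller list) is stored
    under the key 'unified'.
    """
    paths = {}
    for line in text.splitlines():
        if not line:
            continue
        _, controllers, path = line.split(":", 2)  # the path may contain ':'
        paths[controllers if controllers else "unified"] = path
    return paths


def cgroups_of(pid):
    """Read the cgroup membership of a running process."""
    with open("/proc/%d/cgroup" % pid) as f:
        return parse_proc_pid_cgroup(f.read())
```

Called with the PID reported by lxc-info, `cgroups_of()` shows in which lxc.payload.<name> directories the container's init process lives.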

We can also explore the /sys/fs/cgroup directory in search of .stat files related to the Alpine container:

agathe@ordinateur-agathe:/sys/fs/cgroup$ find . -iname "*.stat" | grep -i alpine

./memory/user.slice/user-1000.slice/user@1000.service/app.slice/app-org.gnome.Terminal.slice/vte-spawn-84af319a-29f5-453f-be5f-9dd7b90a3ac1.scope/lxc.payload.Alpine/memory.stat

./memory/user.slice/user-1000.slice/user@1000.service/app.slice/app-org.gnome.Terminal.slice/vte-spawn-84af319a-29f5-453f-be5f-9dd7b90a3ac1.scope/lxc.monitor.Alpine/memory.stat

./unified/user.slice/user-1000.slice/user@1000.service/app.slice/app-org.gnome.Terminal.slice/vte-spawn-84af319a-29f5-453f-be5f-9dd7b90a3ac1.scope/lxc.payload.Alpine/cpu.stat

./unified/user.slice/user-1000.slice/user@1000.service/app.slice/app-org.gnome.Terminal.slice/vte-spawn-84af319a-29f5-453f-be5f-9dd7b90a3ac1.scope/lxc.payload.Alpine/cgroup.stat

./unified/user.slice/user-1000.slice/user@1000.service/app.slice/app-org.gnome.Terminal.slice/vte-spawn-84af319a-29f5-453f-be5f-9dd7b90a3ac1.scope/lxc.monitor.Alpine/cpu.stat

./unified/user.slice/user-1000.slice/user@1000.service/app.slice/app-org.gnome.Terminal.slice/vte-spawn-84af319a-29f5-453f-be5f-9dd7b90a3ac1.scope/lxc.monitor.Alpine/cgroup.stat

agathe@ordinateur-agathe:/sys/fs/cgroup$ cat ./unified/user.slice/user-1000.slice/user@1000.service/app.slice/app-org.gnome.Terminal.slice/vte-spawn-84af319a-29f5-453f-be5f-9dd7b90a3ac1.scope/lxc.payload.Alpine/cpu.stat

usage_usec 10814107

user_usec 3196571

system_usec 7617536

agathe@ordinateur-agathe:/sys/fs/cgroup$ cat ./unified/user.slice/user-1000.slice/user@1000.service/app.slice/app-org.gnome.Terminal.slice/vte-spawn-84af319a-29f5-453f-be5f-9dd7b90a3ac1.scope/lxc.monitor.Alpine/cpu.stat

usage_usec 60402

user_usec 11325

system_usec 49076

The last two commands display CPU usage statistics, expressed in microseconds. The lxc.monitor.Alpine directory is where the monitor process of the Alpine container lives, and the lxc.payload.Alpine directory is where the Alpine container itself lives. These separate directories exist to adhere to the cgroup-v2 delegation requirements.
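Files such as cpu.stat (cgroup v2), memory.stat or cpuacct.stat (cgroup v1) share the same flat-keyed format, one `key value` pair per line, so a single helper is enough to read them. The sketch below, with names of our own, also converts the `*_usec` counters of a cgroup v2 cpu.stat into seconds. Nested-keyed files such as io.stat are not handled.

```python
def parse_flat_keyed(text):
    """Parse 'key value' lines (cpu.stat, memory.stat, cpuacct.stat...)."""
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2:  # ignore lines that are not 'key value'
            stats[parts[0]] = int(parts[1])
    return stats


def read_flat_keyed(path):
    """Read one flat-keyed cgroup file, e.g. .../lxc.payload.Alpine/cpu.stat."""
    with open(path) as f:
        return parse_flat_keyed(f.read())


def cpu_seconds(cpu_stat):
    """Convert the *_usec counters of a cgroup v2 cpu.stat to seconds."""
    return {key[:-len("_usec")]: value / 1e6
            for key, value in cpu_stat.items() if key.endswith("_usec")}
```

With the payload cpu.stat shown above, `cpu_seconds()` gives about 10.8 s of total CPU time, of which about 3.2 s in user mode and 7.6 s in system mode.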

LXC 4.0 now fully supports the unified cgroup hierarchy. For this to work, the whole cgroup driver had to be rewritten, and as a consequence the cgroup layout for LXC containers had to change. Older versions of LXC used the layout /sys/fs/cgroup/<controller>/<container-name>/. For example, in the legacy cgroup hierarchy, the cpuset hierarchy would place the init process of a container named c1 into /sys/fs/cgroup/cpuset/c1/, while the supervising monitor process would stay in /sys/fs/cgroup/cpuset/. LXC 4.0 uses the layout /sys/fs/cgroup/<controller>/lxc.payload.<container-name>/. For the cpuset controller in the legacy cgroup hierarchy, the cgroup of a container named f2 would be /sys/fs/cgroup/cpuset/lxc.payload.f2/. The monitor process now moves into a separate cgroup as well, /sys/fs/cgroup/<controller>/lxc.monitor.<container-name>/, which for our example would be /sys/fs/cgroup/cpuset/lxc.monitor.f2/.
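The change of layout described above can be captured by a small helper, hypothetical and for illustration only, that builds the three candidate paths for a controller and a container name. A monitoring script can then try the LXC 4.0 payload directory first and fall back to the older layout.

```python
import os

CGROUP_ROOT = "/sys/fs/cgroup"


def lxc_cgroup_paths(controller, name, root=CGROUP_ROOT):
    """Return the cgroup v1 directories LXC may use for a container."""
    return {
        # Layout of LXC versions older than 4.0
        "legacy": os.path.join(root, controller, name),
        # LXC 4.0: the container itself and its monitor process
        "payload": os.path.join(root, controller, "lxc.payload." + name),
        "monitor": os.path.join(root, controller, "lxc.monitor." + name),
    }


def container_cgroup_dir(controller, name, root=CGROUP_ROOT):
    """Prefer the LXC 4.0 payload directory, fall back to the legacy one."""
    paths = lxc_cgroup_paths(controller, name, root)
    for key in ("payload", "legacy"):
        if os.path.isdir(paths[key]):
            return paths[key]
    return None  # the container is not running, or uses another layout
```

For the f2 example, `lxc_cgroup_paths("cpuset", "f2")["payload"]` is `/sys/fs/cgroup/cpuset/lxc.payload.f2`.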

Important: with our Ubuntu Desktop Edition, the hierarchy is more complex. It includes app-org.gnome.Terminal.slice sub-directories, because the containers were started from a GNOME Terminal session. To get the simplest view of the hierarchy, we would need an Ubuntu Server Edition, without any graphical interface.
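Because of these extra levels, a script cannot assume that lxc.payload.<name> sits directly under each controller directory. A more tolerant lookup, equivalent in spirit to the find command used earlier, walks the hierarchies with os.walk. The function name is ours; walking the whole tree at every collectd interval may be costly, so caching the result is advisable.

```python
import os


def find_payload_dirs(name, root="/sys/fs/cgroup"):
    """Return every lxc.payload.<name> directory below root, at any depth."""
    target = "lxc.payload." + name
    found = []
    for dirpath, dirnames, _ in os.walk(root):
        if target in dirnames:
            found.append(os.path.join(dirpath, target))
            # Prune: no need to descend into the container's own sub-cgroups
            dirnames.remove(target)
    return sorted(found)
```

On the desktop machine above, `find_payload_dirs("Alpine")` would return both the memory and the unified directories of the container, however deep they are.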

The restrictions enforced by the unified cgroup hierarchy also mean that, in order to start fully unprivileged containers, cooperation is needed on distributions whose init system manages cgroups. This applies to all distributions that use systemd as their init system. When a container is started from the shell via lxc-start or other means, either one needs to be root to allow LXC to escape to the root cgroup, or the init system needs to be instructed to delegate an empty cgroup. In such scenarios, it is wise to set the configuration key lxc.cgroup.relative to 1 to prevent LXC from escaping to the root cgroup.
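To check whether a container already sets this key, one can read its configuration file, by default /var/lib/lxc/<name>/config for containers created with sudo and ~/.local/share/lxc/<name>/config for unprivileged ones. LXC configuration files consist of `key = value` lines and `#` comments. The reader below is a sketch with names of our own; it does not handle every feature of the format (included files, for instance) and assumes that the last assignment of a key wins.

```python
def read_lxc_config(text):
    """Parse 'key = value' lines of an LXC config; repeated keys keep all values."""
    config = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        key, sep, value = line.partition("=")
        if sep:
            config.setdefault(key.strip(), []).append(value.strip())
    return config


def cgroup_relative_enabled(text):
    """True when the last lxc.cgroup.relative entry is set to 1."""
    values = read_lxc_config(text).get("lxc.cgroup.relative", [])
    return bool(values) and values[-1] == "1"
```

If the function returns False, the line `lxc.cgroup.relative = 1` can be appended to the container's config file.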

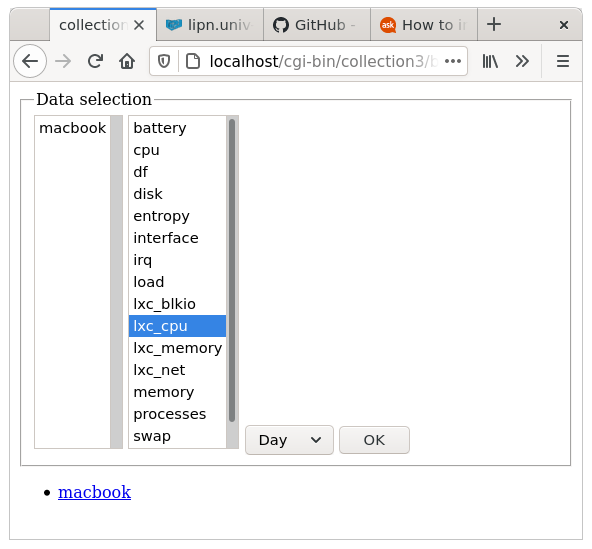

As noted, kernel 5.8 comes with a different file system hierarchy than kernel 4.x, especially for containers. Everything that follows was done with kernel 5.8 and VirtualBox 6.1:

cerin@macbook:~$ uname -a

Linux macbook 5.8.0-41-generic #46-Ubuntu SMP Mon Jan 18 16:48:44 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux

cerin@macbook:~$ lsb_release -a

No LSB modules are available.

Distributor ID: Ubuntu

Description: Ubuntu 20.10

Release: 20.10

Codename: groovy

cerin@macbook:~$ cat /proc/cpuinfo

processor: 0

vendor_id: GenuineIntel

cpu family: 6

model: 61

model name: Intel(R) Core(TM) i5-5287U CPU @ 2.90GHz

stepping: 4

cpu MHz: 2900.000

cache size: 3072 KB

physical id: 0

siblings: 2

core id: 0

cpu cores: 2

apicid: 0

initial apicid: 0

fpu: yes

fpu_exception: yes

cpuid level: 20

wp: yes

flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx rdtscp lm constant_tsc rep_good nopl xtopology nonstop_tsc cpuid tsc_known_freq pni pclmulqdq ssse3 cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx rdrand hypervisor lahf_lm abm 3dnowprefetch invpcid_single pti fsgsbase avx2 invpcid rdseed md_clear flush_l1d

bugs: cpu_meltdown spectre_v1 spectre_v2 spec_store_bypass l1tf mds swapgs itlb_multihit srbds

bogomips: 5800.00

clflush size: 64

cache_alignment: 64

address sizes: 39 bits physical, 48 bits virtual

power management:

processor: 1

vendor_id: GenuineIntel

cpu family: 6

model: 61

model name: Intel(R) Core(TM) i5-5287U CPU @ 2.90GHz

stepping: 4

cpu MHz: 2900.000

cache size: 3072 KB

physical id: 0

siblings: 2

core id: 1

cpu cores: 2

apicid: 1

initial apicid: 1

fpu: yes

fpu_exception: yes

cpuid level: 20

wp: yes

flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx rdtscp lm constant_tsc rep_good nopl xtopology nonstop_tsc cpuid tsc_known_freq pni pclmulqdq ssse3 cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx rdrand hypervisor lahf_lm abm 3dnowprefetch invpcid_single pti fsgsbase avx2 invpcid rdseed md_clear flush_l1d

bugs: cpu_meltdown spectre_v1 spectre_v2 spec_store_bypass l1tf mds swapgs itlb_multihit srbds

bogomips: 5800.00

clflush size: 64

cache_alignment: 64

address sizes: 39 bits physical, 48 bits virtual

power management:

cerin@macbook:~$

First of all, we propose a Python program to experiment with the monitoring of containers. To run it, you need to create the containers with sudo, as follows: sudo lxc-create -t download -n Alpine1 and sudo lxc-create -t download -n Alpine2. You also need to install the nsenter Python module, like this: sudo pip3 install nsenter (sudo is important, since the script itself is run as root with sudo python3 and must be able to import the module). Then execute the following sequence of instructions:

cerin@macbook:~/Desktop$ sudo lxc-ls -f

[sudo] password for cerin:

NAME STATE AUTOSTART GROUPS IPV4 IPV6 UNPRIVILEGED

Alpine1 STOPPED 0 - - - false

Alpine2 STOPPED 0 - - - false

cerin@macbook:~/Desktop$ sudo lxc-start -n Alpine1

cerin@macbook:~/Desktop$ sudo lxc-start -n Alpine2

cerin@macbook:~/Desktop$ sudo python3 collectd_lxc_kernel_5.8.py

root_lxc_cgroup: ['/sys/fs/cgroup/pids/lxc.payload.Alpine2/', '/sys/fs/cgroup/pids/lxc.payload.Alpine1/', '/sys/fs/cgroup/freezer/lxc.payload.Alpine2/', '/sys/fs/cgroup/freezer/lxc.payload.Alpine1/', '/sys/fs/cgroup/hugetlb/lxc.payload.Alpine2/', '/sys/fs/cgroup/hugetlb/lxc.payload.Alpine1/', '/sys/fs/cgroup/devices/lxc.payload.Alpine2/', '/sys/fs/cgroup/devices/lxc.payload.Alpine1/', '/sys/fs/cgroup/memory/lxc.payload.Alpine2/', '/sys/fs/cgroup/memory/lxc.payload.Alpine1/', '/sys/fs/cgroup/cpuset/lxc.payload.Alpine2/', '/sys/fs/cgroup/cpuset/lxc.payload.Alpine1/', '/sys/fs/cgroup/perf_event/lxc.payload.Alpine2/', '/sys/fs/cgroup/perf_event/lxc.payload.Alpine1/', '/sys/fs/cgroup/cpuacct/lxc.payload.Alpine2/', '/sys/fs/cgroup/cpuacct/lxc.payload.Alpine1/', '/sys/fs/cgroup/cpu/lxc.payload.Alpine2/', '/sys/fs/cgroup/cpu/lxc.payload.Alpine1/', '/sys/fs/cgroup/cpu,cpuacct/lxc.payload.Alpine2/', '/sys/fs/cgroup/cpu,cpuacct/lxc.payload.Alpine1/', '/sys/fs/cgroup/blkio/lxc.payload.Alpine2/', '/sys/fs/cgroup/blkio/lxc.payload.Alpine1/', '/sys/fs/cgroup/rdma/lxc.payload.Alpine2/', '/sys/fs/cgroup/rdma/lxc.payload.Alpine1/', '/sys/fs/cgroup/net_prio/lxc.payload.Alpine2/', '/sys/fs/cgroup/net_prio/lxc.payload.Alpine1/', '/sys/fs/cgroup/net_cls/lxc.payload.Alpine2/', '/sys/fs/cgroup/net_cls/lxc.payload.Alpine1/', '/sys/fs/cgroup/net_cls,net_prio/lxc.payload.Alpine2/', '/sys/fs/cgroup/net_cls,net_prio/lxc.payload.Alpine1/', '/sys/fs/cgroup/systemd/lxc.payload.Alpine2/', '/sys/fs/cgroup/systemd/lxc.payload.Alpine1/', '/sys/fs/cgroup/unified/lxc.payload.Alpine2/', '/sys/fs/cgroup/unified/lxc.payload.Alpine1/']

unprivilege_lxc_cgroup: []

{0: {'Alpine2': {'pids': '/sys/fs/cgroup/pids/lxc.payload.Alpine2/', 'freezer': '/sys/fs/cgroup/freezer/lxc.payload.Alpine2/', 'hugetlb': '/sys/fs/cgroup/hugetlb/lxc.payload.Alpine2/', 'devices': '/sys/fs/cgroup/devices/lxc.payload.Alpine2/', 'memory': '/sys/fs/cgroup/memory/lxc.payload.Alpine2/', 'cpuset': '/sys/fs/cgroup/cpuset/lxc.payload.Alpine2/', 'perf_event': '/sys/fs/cgroup/perf_event/lxc.payload.Alpine2/', 'cpuacct': '/sys/fs/cgroup/cpuacct/lxc.payload.Alpine2/', 'cpu': '/sys/fs/cgroup/cpu/lxc.payload.Alpine2/', 'cpu,cpuacct': '/sys/fs/cgroup/cpu,cpuacct/lxc.payload.Alpine2/', 'blkio': '/sys/fs/cgroup/blkio/lxc.payload.Alpine2/', 'rdma': '/sys/fs/cgroup/rdma/lxc.payload.Alpine2/', 'net_prio': '/sys/fs/cgroup/net_prio/lxc.payload.Alpine2/', 'net_cls': '/sys/fs/cgroup/net_cls/lxc.payload.Alpine2/', 'net_cls,net_prio': '/sys/fs/cgroup/net_cls,net_prio/lxc.payload.Alpine2/', 'systemd': '/sys/fs/cgroup/systemd/lxc.payload.Alpine2/', 'unified': '/sys/fs/cgroup/unified/lxc.payload.Alpine2/'}, 'Alpine1': {'pids': '/sys/fs/cgroup/pids/lxc.payload.Alpine1/', 'freezer': '/sys/fs/cgroup/freezer/lxc.payload.Alpine1/', 'hugetlb': '/sys/fs/cgroup/hugetlb/lxc.payload.Alpine1/', 'devices': '/sys/fs/cgroup/devices/lxc.payload.Alpine1/', 'memory': '/sys/fs/cgroup/memory/lxc.payload.Alpine1/', 'cpuset': '/sys/fs/cgroup/cpuset/lxc.payload.Alpine1/', 'perf_event': '/sys/fs/cgroup/perf_event/lxc.payload.Alpine1/', 'cpuacct': '/sys/fs/cgroup/cpuacct/lxc.payload.Alpine1/', 'cpu': '/sys/fs/cgroup/cpu/lxc.payload.Alpine1/', 'cpu,cpuacct': '/sys/fs/cgroup/cpu,cpuacct/lxc.payload.Alpine1/', 'blkio': '/sys/fs/cgroup/blkio/lxc.payload.Alpine1/', 'rdma': '/sys/fs/cgroup/rdma/lxc.payload.Alpine1/', 'net_prio': '/sys/fs/cgroup/net_prio/lxc.payload.Alpine1/', 'net_cls': '/sys/fs/cgroup/net_cls/lxc.payload.Alpine1/', 'net_cls,net_prio': '/sys/fs/cgroup/net_cls,net_prio/lxc.payload.Alpine1/', 'systemd': '/sys/fs/cgroup/systemd/lxc.payload.Alpine1/', 'unified': '/sys/fs/cgroup/unified/lxc.payload.Alpine1/'}}}
Container_PID: 2727
['Inter-| Receive | Transmit', ' face |bytes packets errs drop fifo frame compressed multicast|bytes packets errs drop fifo colls carrier compressed', ' lo: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0', ' eth0: 4702 32 0 0 0 0 0 0 1725 12 0 0 0 0 0 0', '']
container_name: Alpine2 rx_bytes: 4702
container_name: Alpine2 rx_data: ['4702', '32', '0', '0', '0', '0', '0']
container_name: Alpine2 rx_packets: 32
container_name: Alpine2 tx_packets: 12
container_name: Alpine2 rx_errors: 0
container_name: Alpine2 tx_errors: 0
container_name: Alpine2 mem_rss: 405504
container_name: Alpine2 mem_cache: 2433024
container_name: Alpine2 mem_swap: 0
container_name: Alpine2 cpu_user: 18
container_name: Alpine2 cpu_system: 44
container_name: Alpine2 bytes_read: 2560000
container_name: Alpine2 bytes_write: 4096
container_name: Alpine2 ops_read: 186
container_name: Alpine2 ops_write: 1
Container_PID: 2370
['Inter-| Receive | Transmit', ' face |bytes packets errs drop fifo frame compressed multicast|bytes packets errs drop fifo colls carrier compressed', ' lo: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0', ' eth0: 8321 58 0 0 0 0 0 0 1725 12 0 0 0 0 0 0', '']
container_name: Alpine1 rx_bytes: 8321
container_name: Alpine1 rx_data: ['8321', '58', '0', '0', '0', '0', '0']
container_name: Alpine1 rx_packets: 58
container_name: Alpine1 tx_packets: 12
container_name: Alpine1 rx_errors: 0
container_name: Alpine1 tx_errors: 0
container_name: Alpine1 mem_rss: 405504
container_name: Alpine1 mem_cache: 2568192
container_name: Alpine1 mem_swap: 0
container_name: Alpine1 cpu_user: 18
container_name: Alpine1 cpu_system: 40
container_name: Alpine1 bytes_read: 2560000
container_name: Alpine1 bytes_write: 4096
container_name: Alpine1 ops_read: 186
container_name: Alpine1 ops_write: 1
cerin@macbook:~/Desktop$
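The raw /proc/net/dev content printed above for each container has two header lines. These are followed by one line per interface. After the colon, each line holds eight receive counters and then eight transmit counters. This layout explains why the script takes the receive fields from the start of the list and the transmit fields from index 8 onward.

The following is a minimal standalone sketch, not part of collectd_lxc_kernel_5.8.py, that parses such text into named counters. The function name parse_net_dev, the constant names and the dictionary layout are hypothetical choices made for illustration. The sample text is copied from the Alpine2 output above.

```python
# Hypothetical helper (not part of collectd_lxc_kernel_5.8.py): parse the text
# of /proc/net/dev into named per-interface counters. Assumes the standard
# Linux layout: two header lines, then "iface: <8 receive> <8 transmit>".
RX_FIELDS = ["bytes", "packets", "errs", "drop", "fifo", "frame", "compressed", "multicast"]
TX_FIELDS = ["bytes", "packets", "errs", "drop", "fifo", "colls", "carrier", "compressed"]

# Sample copied from the Alpine2 output shown above.
SAMPLE = (
    "Inter-| Receive | Transmit\n"
    " face |bytes packets errs drop fifo frame compressed multicast|"
    "bytes packets errs drop fifo colls carrier compressed\n"
    " lo: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0\n"
    " eth0: 4702 32 0 0 0 0 0 0 1725 12 0 0 0 0 0 0\n"
)


def parse_net_dev(text):
    """Return {interface: {"rx": {...}, "tx": {...}}} from /proc/net/dev text."""
    stats = {}
    for line in text.splitlines()[2:]:  # skip the two header lines
        if not line.strip():
            continue
        # partition() splits on the first ':' only, even if no space follows it
        iface, _, counters = line.partition(":")
        values = [int(v) for v in counters.split()]
        stats[iface.strip()] = {
            "rx": dict(zip(RX_FIELDS, values[:8])),   # receive counters
            "tx": dict(zip(TX_FIELDS, values[8:16])), # transmit counters
        }
    return stats


if __name__ == "__main__":
    eth0 = parse_net_dev(SAMPLE)["eth0"]
    print(eth0["rx"]["bytes"], eth0["tx"]["bytes"])  # 4702 1725
```

Naming the fields avoids the hard-coded slice bounds. The sketch covers only the parsing step. Reading the file from inside a container's network namespace still requires the nsenter approach used in the script.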

The Python code that explores and monitors the two containers (Alpine1 and Alpine2) first collects the paths of the cgroup directories holding the LXC metrics. It then extracts the container names from these paths. Finally, it reports the CPU, memory, disk and network usage of each container. The code of collectd_lxc_kernel_5.8.py is as follows. As pointed out previously, it works only for containers created through sudo lxc-create ..., that is, containers whose cgroup directories sit directly under /sys/fs/cgroup/<controller>/lxc.payload.<name>/:

#
# From an initial idea available online at:
# https://github.com/aarnaud/collectd-lxc
#
# christophe.cerin@univ-paris13.fr
# February, 2021
#
import glob
import os
import re
import subprocess

from nsenter import Namespace


def configer(ObjConfiguration):
    collectd.info('Configuring lxc collectd')


def initer():
    collectd.info('initing lxc collectd')

def reader(input_data=None):
    # cgroup (v1) directories of the containers started by root...
    root_lxc_cgroup = glob.glob("/sys/fs/cgroup/*/lxc.payload.*/")
    # ...and of the unprivileged containers, nested deeper in the hierarchy
    unprivilege_lxc_cgroup = glob.glob("/sys/fs/cgroup/*/*/*/*/lxc.payload.*/")
    cgroup_lxc = root_lxc_cgroup + unprivilege_lxc_cgroup
    print("root_lxc_cgroup: "+str(root_lxc_cgroup))
    print("unprivilege_lxc_cgroup: "+str(unprivilege_lxc_cgroup))
    metrics = dict()
    # Group the cgroup paths by user and by container (only root, user 0, is handled)
    for cgroup_lxc_metrics in cgroup_lxc:
        m = re.search(r"/sys/fs/cgroup/(?P<type>[a-zA-Z_,]+)/lxc\.payload\.(?P<container_name>.*)/", cgroup_lxc_metrics)
        if m is None:
            # Unprivileged container paths do not match this pattern: skip them
            continue
        user_id = 0  # int(m.group("user_id") or 0)
        stat_type = m.group("type")
        container_name = m.group("container_name")
        if user_id not in metrics:
            metrics[user_id] = dict()
        if container_name not in metrics[user_id]:
            metrics[user_id][container_name] = dict()
        metrics[user_id][container_name][stat_type] = cgroup_lxc_metrics
    print(metrics)

    # foreach user
    for user_id in metrics:
        # foreach container
        for container_name in metrics[user_id]:
            lxc_fullname = "{0}__{1}".format(user_id, container_name)
            for metric in metrics[user_id][container_name]:
                ### Memory
                if metric == "memory":
                    with open(os.path.join(metrics[user_id][container_name][metric], 'memory.stat'), 'r') as f:
                        lines = f.read().splitlines()
                    mem_rss = 0
                    mem_cache = 0
                    mem_swap = 0
                    for line in lines:
                        data = line.split()
                        if data[0] == "total_rss":
                            mem_rss = int(data[1])
                        elif data[0] == "total_cache":
                            mem_cache = int(data[1])
                        elif data[0] == "total_swap":
                            mem_swap = int(data[1])
                    print("container_name: "+container_name+" mem_rss: "+str(mem_rss))
                    print("container_name: "+container_name+" mem_cache: "+str(mem_cache))
                    print("container_name: "+container_name+" mem_swap: "+str(mem_swap))
                    """
                    values = collectd.Values(plugin_instance=lxc_fullname,
                                             type="gauge", plugin="lxc_memory")
                    values.dispatch(type_instance="rss", values=[mem_rss])
                    values.dispatch(type_instance="cache", values=[mem_cache])
                    values.dispatch(type_instance="swap", values=[mem_swap])
                    """
                ### End Memory

                ### CPU
                if metric == "cpuacct":
                    with open(os.path.join(metrics[user_id][container_name][metric], 'cpuacct.stat'), 'r') as f:
                        lines = f.read().splitlines()
                    # cpuacct.stat reports cumulative user/system time in USER_HZ ticks
                    cpu_user = 0
                    cpu_system = 0
                    for line in lines:
                        data = line.split()
                        if data[0] == "user":
                            cpu_user = int(data[1])
                        elif data[0] == "system":
                            cpu_system = int(data[1])
                    print("container_name: "+container_name+" cpu_user: "+str(cpu_user))
                    print("container_name: "+container_name+" cpu_system: "+str(cpu_system))
                    """
                    values = collectd.Values(plugin_instance=lxc_fullname,
                                             type="gauge", plugin="lxc_cpu")
                    values.dispatch(type_instance="user", values=[cpu_user])
                    values.dispatch(type_instance="system", values=[cpu_system])
                    """
                ### End CPU

                ### DISK
                if metric == "blkio":
                    with open(os.path.join(metrics[user_id][container_name][metric], 'blkio.throttle.io_service_bytes'), 'r') as f:
                        lines = f.read()
                    # re.search returns the first "Read"/"Write" entry, i.e. that of the first device listed
                    bytes_read = int(re.search(r"Read\s+(?P<read>[0-9]+)", lines).group("read"))
                    bytes_write = int(re.search(r"Write\s+(?P<write>[0-9]+)", lines).group("write"))
                    with open(os.path.join(metrics[user_id][container_name][metric], 'blkio.throttle.io_serviced'), 'r') as f:
                        lines = f.read()
                    ops_read = int(re.search(r"Read\s+(?P<read>[0-9]+)", lines).group("read"))
                    ops_write = int(re.search(r"Write\s+(?P<write>[0-9]+)", lines).group("write"))
                    print("container_name: "+container_name+" bytes_read: "+str(bytes_read))
                    print("container_name: "+container_name+" bytes_write: "+str(bytes_write))
                    print("container_name: "+container_name+" ops_read: "+str(ops_read))
                    print("container_name: "+container_name+" ops_write: "+str(ops_write))
                    """
                    values = collectd.Values(plugin_instance=lxc_fullname,
                                             type="gauge", plugin="lxc_blkio")
                    values.dispatch(type_instance="bytes_read", values=[bytes_read])
                    values.dispatch(type_instance="bytes_write", values=[bytes_write])
                    values.dispatch(type_instance="ops_read", values=[ops_read])
                    values.dispatch(type_instance="ops_write", values=[ops_write])
                    """
                ### End DISK

                ### Network
                if metric == "pids":
                    with open(os.path.join(metrics[user_id][container_name][metric], 'tasks'), 'r') as f:
                        # The first line is the PID of the container
                        container_PID = f.readline().rstrip()
                    print("Container_PID: "+str(container_PID))
                    with Namespace(container_PID, 'net'):
                        # To read the network metrics of the namespace, /proc/net/dev is read
                        # with cat in a subprocess: the "open" method does not work with the namespace
                        network_data = subprocess.check_output(['cat', '/proc/net/dev']).decode().split("\n")
                    # HEAD OF /proc/net/dev :
                    # Inter-|Receive |Transmit
                    # face |bytes packets errs drop fifo frame compressed multicast|bytes packets errs drop fifo colls carrier compressed
                    print(network_data)
                    for line in network_data[2:]:
                        if line.strip() == "":
                            continue
                        interface = line.strip().split(':')[0]
                        # Receive counters come first (8 fields), then the transmit counters
                        rx_data = line.strip().split(':')[1].split()[0:7]
                        tx_data = line.strip().split(':')[1].split()[8:15]
                        rx_bytes = int(rx_data[0])
                        tx_bytes = int(tx_data[0])
                        rx_packets = int(rx_data[1])
                        tx_packets = int(tx_data[1])
                        rx_errors = int(rx_data[2])
                        tx_errors = int(tx_data[2])
                        print("container_name: "+container_name+" rx_bytes: "+str(rx_bytes))
                        print("container_name: "+container_name+" rx_data: "+str(rx_data))
                        print("container_name: "+container_name+" rx_packets: "+str(rx_packets))
                        print("container_name: "+container_name+" tx_packets: "+str(tx_packets))
                        print("container_name: "+container_name+" rx_errors: "+str(rx_errors))
                        print("container_name: "+container_name+" tx_errors: "+str(tx_errors))
                        """
                        values = collectd.Values(plugin_instance=lxc_fullname,
                                                 type="gauge", plugin="lxc_net")
                        values.dispatch(type_instance="tx_bytes_{0}".format(interface), values=[tx_bytes])
                        values.dispatch(type_instance="rx_bytes_{0}".format(interface), values=[rx_bytes])
                        values.dispatch(type_instance="tx_packets_{0}".format(interface), values=[tx_packets])
                        values.dispatch(type_instance="rx_packets_{0}".format(interface), values=[rx_packets])
                        values.dispatch(type_instance="tx_errors_{0}".format(interface), values=[tx_errors])
                        values.dispatch(type_instance="rx_errors_{0}".format(interface), values=[rx_errors])
                        """
                ### End Network

if __name__ == '__main__':
    # Mimic Collectd Values object
    class Values(object):
        def __init__(self, **kwargs):
            self.__dict__["_values"] = kwargs

        def __setattr__(self, key, value):
            self.__dict__["_values"][key] = value