This lecture introduces the concept of Data Life Cycle management (DLM) through the ActiveData and GraalVM ecosystems. Data life cycle management is a policy-based approach to managing the flow of an information system's data throughout its life cycle: from creation and initial storage to the time when it becomes obsolete and is deleted.

Imagine that a piece of data is captured from a sensor and entered into a database. The new data will either be accessed for reporting, analytics, or some other use, or it will sit in the database and eventually become obsolete. The data may also have logic and validations (curation, compression) applied to it. At some point, however, it will come to the end of its life and be archived, purged, or both. Defining and organizing this process into repeatable steps is what is known as Data Life Cycle Management.

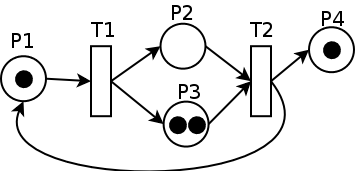

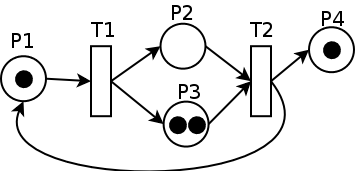

In this lesson, the notion of a step is tied to Petri nets. A Petri net is a model for describing a system that evolves over time through the firing of transitions. In short, a Petri net consists of places, transitions, and arcs. Arcs run from a place to a transition or vice versa, never between places or between transitions. The places from which an arc runs to a transition are called the input places of the transition; the places to which arcs run from a transition are called the output places of the transition.

A place may refer to a state of the system, for instance the state "Created", meaning that the data has been created. Transitions drive the move from one place to another. In the tool we use, transitions are associated with handlers that apply some processing to the physical data. For instance, the code or script of a given handler may delete the data under consideration.

In the diagram of a Petri net (see Figure 1 below), places are conventionally depicted as circles, transitions as long narrow rectangles, and arcs as one-way arrows that connect places to transitions or transitions to places. If the diagram were of an elementary net, the places in a configuration would conventionally be depicted as circles that each contain a single dot called a token. In the given diagram (see below), a place circle may contain more than one token, to show the number of times the place appears in a configuration. The configuration of tokens distributed over an entire Petri net diagram is called a marking. Tokens serve to enable transitions: if an input place holds no token, no transition that has this place as input can be fired. Implicitly, when a transition is fired, the token moves from the input place to the output place. In Figure 1 (see below), the T1 transition, for instance, can be fired because a token is located in place P1.
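The firing rule described above can be sketched in a few lines of Java. This is only an illustrative sketch and not the ActiveData implementation: the class `PetriNetSketch`, its methods, and the output place name `P2` are hypothetical, and every arc is assumed to carry exactly one token.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Minimal place/transition net: the marking maps each place name to its token count. */
class PetriNetSketch {

    /** A transition with its input and output places (hypothetical helper type). */
    static final class Transition {
        final String name;
        final List<String> inputs;
        final List<String> outputs;

        Transition(String name, List<String> inputs, List<String> outputs) {
            this.name = name;
            this.inputs = inputs;
            this.outputs = outputs;
        }
    }

    private final Map<String, Integer> marking = new HashMap<>();

    void putTokens(String place, int count) {
        marking.put(place, count);
    }

    int tokens(String place) {
        return marking.getOrDefault(place, 0);
    }

    /** A transition is enabled when every input place holds at least one token. */
    boolean isEnabled(Transition t) {
        for (String place : t.inputs) {
            if (tokens(place) < 1) {
                return false;
            }
        }
        return true;
    }

    /** Firing consumes one token per input place and produces one per output place. */
    boolean fire(Transition t) {
        if (!isEnabled(t)) {
            return false;
        }
        for (String place : t.inputs) {
            marking.merge(place, -1, Integer::sum);
        }
        for (String place : t.outputs) {
            marking.merge(place, 1, Integer::sum);
        }
        return true;
    }

    public static void main(String[] args) {
        PetriNetSketch net = new PetriNetSketch();
        net.putTokens("P1", 1);
        Transition t1 = new Transition("T1", List.of("P1"), List.of("P2"));
        System.out.println(net.fire(t1));      // true: P1 held a token
        System.out.println(net.fire(t1));      // false: P1 is now empty
        System.out.println(net.tokens("P2"));  // 1
    }
}
```

Representing the marking as a map from place names to counts keeps the enabling test and the firing step separate, which mirrors the distinction between an enabled transition and a fired one.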

To alleviate the complexity of managing data life cycles, Simonet, Fedak and Ripeanu proposed Active Data, a programming model to automate and improve the expressiveness of data management applications. First, ActiveData is a formal model based on Petri nets. Second, the Active Data programming model allows code execution at each stage of the data life cycle: routines provided by programmers are executed when events (creation, replication, transfer, deletion) happen to any data. Active Data borrows from Active Message the idea of executing user-provided code when certain events occur (message reception in the case of Active Message). Together with its Java implementation, the ActiveData framework illustrates the adequacy of the model for programming applications that manage distributed and dynamic data.

Together, the Active Data programming model and its execution environment provide a data-centric, event-driven view that allows:

The Active Data runtime offers a client API that allows programmers to supply code to be executed when data transitions are triggered. The supplied code is called a handler. The usual course of events that leads to code execution is as follows:

The last two points are repeated as long as the handler subscription remains: every time the transition is triggered, on any data, on any node manipulating data. This procedure should be refined to take the application development methodology into account.

The paradigm used by Active Data to propagate transitions is Publish/Subscribe. In the ActiveData implementation, every node can be a publisher and a subscriber at the same time. Clients of the Active Data runtime (see terminal 3 in our next example) publish transitions to a centralized service called the Active Data Service (see terminal 1 in our next example). Clients also pull transition information from the Active Data Service (see terminal 2 in our next example) in order to run the handler associated with the transition.
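The publish, pull, and dispatch roles described above can be pictured with the following sketch. It is not the Active Data API: the names `PubSubSketch`, `Service`, `Client`, `publish`, `pull` and `pullAndDispatch` are hypothetical, everything runs in a single process, and the sketch assumes a single subscribing client so that one shared queue is enough.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Queue;
import java.util.function.Consumer;

class PubSubSketch {

    /** Stand-in for the centralized service: it only queues published transition names. */
    static final class Service {
        private final Queue<String> published = new ArrayDeque<>();

        void publish(String transition) {
            published.add(transition);
        }

        /** Hands over everything published since the last pull. */
        List<String> pull() {
            List<String> batch = new ArrayList<>(published);
            published.clear();
            return batch;
        }
    }

    /** Stand-in for a subscribing client: it pulls, then runs the matching handlers locally. */
    static final class Client {
        private final Map<String, List<Consumer<String>>> handlers = new HashMap<>();

        void subscribeTo(String transition, Consumer<String> handler) {
            handlers.computeIfAbsent(transition, k -> new ArrayList<>()).add(handler);
        }

        /** Returns the number of handlers executed during this pull. */
        int pullAndDispatch(Service service) {
            int executed = 0;
            for (String transition : service.pull()) {
                for (Consumer<String> handler : handlers.getOrDefault(transition, List.of())) {
                    handler.accept(transition);
                    executed++;
                }
            }
            return executed;
        }
    }

    public static void main(String[] args) {
        Service service = new Service();
        Client client = new Client();
        client.subscribeTo("Co2.location", t -> System.out.println("handler ran for " + t));
        service.publish("Co2.location");  // a publisher announces a transition
        service.publish("Co2.store");     // nobody subscribed to this one in the sketch
        System.out.println("handlers executed: " + client.pullAndDispatch(service));
    }
}
```

The point of the sketch is the direction of the calls: publishers push to the service, while subscribers pull from it and execute their handlers on their own side.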

As a result, the application developer needs to implement:

For more information related to ActiveData, complementary readings are the following:

In this lesson, we use GraalVM to implement handlers coded in different programming languages. The key idea is to demonstrate that, nowadays, applications can be developed with multiple programming languages in mind, through the sharing of variables or abstract data types. To learn more on the GraalVM polyglot virtual machine, please follow:

In COVID-19 times, our purpose is to allow efficient ventilation of the room(s) of a building (professional offices, hospital services, retirement homes, nurseries, teaching premises from kindergarten to university, individual apartments, ...). The ventilation must be carried out in agreement with the different users, and must meet the requirements of sanitary safety and low energy consumption. In this scenario, the data to be captured through sensors and actuators in the building could be the following:

A simplified version of the data life cycle (DLC) of the CO2 example corresponds to the following steps. First, firing the 'location' transition generates a vector of integers through a JavaScript script. Then we have three choices: we fire either the 'compress', the 'filter' or the 'curation' transition. In the case of 'curation', we keep a slice of the initial vector, through a Python script. Then, the 't3' transition must be fired. Then the 'compute' transition triggers the computation of the sum of the values in the slice through a Ruby script. Then the 'heat_plus' or the 'heat_moins' transition must be fired. Finally, the 'store' transition must be fired to complete the DLC. The Petri net is given below in Figure 2. One execution is also given later on, in which the 'location', 'curation', 't3', 'compute', 'heat_plus' and 'store' transitions are fired successively. See the 'terminal 3' tab.
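To check that a sequence of transitions is a valid path through this life cycle, one can replay it on a table of arcs. The sketch below is a simplification under stated assumptions: it follows a single token, the class `Co2ReplaySketch` and its `replay` method are hypothetical, and the predecessor and successor places are copied from the Co2 model and the terminal 1 trace of this lesson.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class Co2ReplaySketch {

    /** transition name -> {predecessor place, successor place} */
    static final Map<String, String[]> ARCS = new LinkedHashMap<>();

    static {
        ARCS.put("location", new String[] {"Created", "p2"});
        ARCS.put("filter", new String[] {"p2", "p3"});
        ARCS.put("compress", new String[] {"p2", "p4"});
        ARCS.put("curation", new String[] {"p2", "p5"});
        ARCS.put("t1", new String[] {"p3", "p6"});
        ARCS.put("t2", new String[] {"p4", "p6"});
        ARCS.put("t3", new String[] {"p5", "p6"});
        ARCS.put("compute", new String[] {"p6", "p7"});
        ARCS.put("heat_plus", new String[] {"p7", "p8"});
        ARCS.put("heat_moins", new String[] {"p7", "p8"});
        ARCS.put("store", new String[] {"p8", "Terminated"});
    }

    /** Returns the place reached by the token, or throws if a transition is not enabled. */
    static String replay(List<String> transitions) {
        String place = "Created";
        for (String transition : transitions) {
            String[] arc = ARCS.get(transition);
            if (arc == null || !arc[0].equals(place)) {
                throw new IllegalStateException("cannot fire " + transition + " from " + place);
            }
            place = arc[1];
        }
        return place;
    }

    public static void main(String[] args) {
        List<String> run = List.of("location", "curation", "t3", "compute", "heat_plus", "store");
        System.out.println(replay(run));  // Terminated
    }
}
```

A sequence such as 'location' followed directly by 't3' is rejected, because after 'location' the token is in p2 while 't3' expects it in p5.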

These steps illustrate that, in general, it is common practice to throttle the data before pushing them to a computing platform and then archiving them together with the associated results. Throttling the data may consist of filtering, compressing and/or curating (i.e. looking for and deleting invalid data). Once the data throttling has been performed, we need to decide whether to open or close the windows in the room and, ultimately, whether to increase or decrease the room temperature. This decision concludes the data life cycle.

The CO2 example that we have implemented also illustrates the coupling of ActiveData with GraalVM, which runs handlers written in different programming languages. Here, we use JavaScript to produce the initial vector of integers, then Python to extract a slice from the vector, then Ruby to compute the sum of all the values in the slice.
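The three processing stages can be pictured in plain Java before looking at the polyglot version. This sketch does not use GraalVM and is not the lesson's handler code: the class `Co2PipelineSketch`, the generation rule (even numbers) and the slice bounds 1 to 7 are assumptions chosen only to reproduce the values shown in the terminal 2 trace of this lesson.

```java
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.IntStream;

class Co2PipelineSketch {

    /** Stage 1 (JavaScript in the lesson): the first n even numbers, starting at 0. */
    static List<Integer> produce(int n) {
        return IntStream.range(0, n).map(i -> 2 * i).boxed().collect(Collectors.toList());
    }

    /** Stage 2 (Python in the lesson): keep the elements at indices from (inclusive) to to (exclusive). */
    static List<Integer> slice(List<Integer> vector, int from, int to) {
        return List.copyOf(vector.subList(from, to));
    }

    /** Stage 3 (Ruby in the lesson): sum the values of the slice. */
    static int sum(List<Integer> values) {
        return values.stream().mapToInt(Integer::intValue).sum();
    }

    public static void main(String[] args) {
        List<Integer> initial = produce(10);
        List<Integer> kept = slice(initial, 1, 7);
        System.out.println(initial);    // [0, 2, 4, 6, 8, 10, 12, 14, 16, 18]
        System.out.println(kept);       // [2, 4, 6, 8, 10, 12]
        System.out.println(sum(kept));  // 42
    }
}
```

In the polyglot application each stage is a script in a different language, and the value produced by one stage is shared with the next one through GraalVM rather than passed as a Java list.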

To play with the CO2 example, proceed as follows.

1- Compile with 3 ant instructions, according to the build.xml file for compatibility of ActiveData with GraalVM. The build.xml file is given at the end of this page. The GraalVM java and javac executables become the frontend for compiling ActiveData.

cerin@ordinateur-cerin:~/Bureau/ActiveData$ ant clean

Buildfile: /home/cerin/Bureau/ActiveData/build.xml

clean:

BUILD SUCCESSFUL

Total time: 0 seconds

cerin@ordinateur-cerin:~/Bureau/ActiveData$ ant

Buildfile: /home/cerin/Bureau/ActiveData/build.xml

init:

dependencies:

[get] Destination already exists (skipping): /home/cerin/Bureau/ActiveData/lib/junit-4.10.jar

[get] Destination already exists (skipping): /home/cerin/Bureau/ActiveData/lib/mail-1.4.5.jar

[get] Destination already exists (skipping): /home/cerin/Bureau/ActiveData/lib/twitter4j-core-4.0.7.jar

compile:

[javac] Compiling 73 source files to /home/cerin/Bureau/ActiveData/bin

[javac] Note: Some input files use or override a deprecated API.

[javac] Note: Recompile with -Xlint:deprecation for details.

BUILD SUCCESSFUL

Total time: 6 seconds

cerin@ordinateur-cerin:~/Bureau/ActiveData$ ant jar

Buildfile: /home/cerin/Bureau/ActiveData/build.xml

init:

dependencies:

[get] Destination already exists (skipping): /home/cerin/Bureau/ActiveData/lib/junit-4.10.jar

[get] Destination already exists (skipping): /home/cerin/Bureau/ActiveData/lib/mail-1.4.5.jar

[get] Destination already exists (skipping): /home/cerin/Bureau/ActiveData/lib/twitter4j-core-4.0.7.jar

compile:

jar:

[jar] Building jar: /home/cerin/Bureau/ActiveData/dist/active-data-lib-0.2.0.jar

BUILD SUCCESSFUL

Total time: 1 second

cerin@ordinateur-cerin:~/Bureau/ActiveData$

2- Play

Note: for steps a), b) and c), pay attention to the location (directory) from which you run the commands. Notice that the java executable we use is the GraalVM java executable. It is a requirement for our polyglot application.

Note: terminal 1 runs in verbose mode, so that we can collect information related to the current state of the DLC. In particular, you can follow the place where the token is located. We also get information about the predecessor and successor places of each transition.

terminal 1

==========

cerin@ordinateur-cerin:~/Bureau/ActiveData$ ../graalvm-ce-java11-20.2.0/bin/java -jar dist/active-data-lib-0.2.0.jar

Active Data service running on rmi://127.0.1.1:1200/ActiveData

Life cycle places [Place Co2.p2]

Life cycle places [Place Co2.p3]

Life cycle places [Place Co2.p4]

Life cycle places [Place Co2.p5]

Life cycle places [Place Co2.p6]

Life cycle places [Place Co2.p7]

Life cycle places [Place Co2.p8]

Life cycle places [Place Co2.Created]

Life cycle places [Place Co2.Terminated]

Transition is defined by Key = Co2.compute -- Value = [Transition Co2.compute]

Token is: []

Predecessor place: [Place Co2.p6]

Successor place: [Place Co2.p7]

Transition is defined by Key = Co2.location -- Value = [Transition Co2.location]

Token is: [Token: {"Co2", "my_name", 1}]

Predecessor place: [Place Co2.Created]

Successor place: [Place Co2.p2]

Transition is defined by Key = Co2.filter -- Value = [Transition Co2.filter]

Token is: []

Predecessor place: [Place Co2.p2]

Successor place: [Place Co2.p3]

Transition is defined by Key = Co2.Create -- Value = [Transition Co2.Create]

Successor place: [Place Co2.Created]

Transition is defined by Key = Co2.t1 -- Value = [Transition Co2.t1]

Token is: []

Predecessor place: [Place Co2.p3]

Successor place: [Place Co2.p6]

Transition is defined by Key = Co2.t2 -- Value = [Transition Co2.t2]

Token is: []

Predecessor place: [Place Co2.p4]

Successor place: [Place Co2.p6]

Transition is defined by Key = Co2.t3 -- Value = [Transition Co2.t3]

Token is: []

Predecessor place: [Place Co2.p5]

Successor place: [Place Co2.p6]

Transition is defined by Key = Co2.heat_plus -- Value = [Transition Co2.heat_plus]

Token is: []

Predecessor place: [Place Co2.p7]

Successor place: [Place Co2.p8]

Transition is defined by Key = Co2.curation -- Value = [Transition Co2.curation]

Token is: []

Predecessor place: [Place Co2.p2]

Successor place: [Place Co2.p5]

Transition is defined by Key = Co2.heat_moins -- Value = [Transition Co2.heat_moins]

Token is: []

Predecessor place: [Place Co2.p7]

Successor place: [Place Co2.p8]

Transition is defined by Key = Co2.store -- Value = [Transition Co2.store]

Token is: []

Predecessor place: [Place Co2.p8]

Successor place: [Place Co2.Terminated]

Transition is defined by Key = Co2.compress -- Value = [Transition Co2.compress]

Token is: []

Predecessor place: [Place Co2.p2]

Successor place: [Place Co2.p4]

Consume token number 1 from place Co2.Created

ProduceNewToken() call => [Place Co2.p2]

Life cycle places [Place Co2.p2]

Life cycle places [Place Co2.p3]

Life cycle places [Place Co2.p4]

Life cycle places [Place Co2.p5]

Life cycle places [Place Co2.p6]

Life cycle places [Place Co2.p7]

Life cycle places [Place Co2.p8]

Life cycle places [Place Co2.Created]

Life cycle places [Place Co2.Terminated]

Transition is defined by Key = Co2.compute -- Value = [Transition Co2.compute]

Token is: []

Predecessor place: [Place Co2.p6]

Successor place: [Place Co2.p7]

Transition is defined by Key = Co2.location -- Value = [Transition Co2.location]

Token is: []

Predecessor place: [Place Co2.Created]

Successor place: [Place Co2.p2]

Transition is defined by Key = Co2.filter -- Value = [Transition Co2.filter]

Token is: [Token: {"Co2", "my_name", 1}, Token: {"Co2", "my_name", 3}]

Predecessor place: [Place Co2.p2]

Successor place: [Place Co2.p3]

Transition is defined by Key = Co2.Create -- Value = [Transition Co2.Create]

Successor place: [Place Co2.Created]

Transition is defined by Key = Co2.t1 -- Value = [Transition Co2.t1]

Token is: []

Predecessor place: [Place Co2.p3]

Successor place: [Place Co2.p6]

Transition is defined by Key = Co2.t2 -- Value = [Transition Co2.t2]

Token is: []

Predecessor place: [Place Co2.p4]

Successor place: [Place Co2.p6]

Transition is defined by Key = Co2.t3 -- Value = [Transition Co2.t3]

Token is: []

Predecessor place: [Place Co2.p5]

Successor place: [Place Co2.p6]

Transition is defined by Key = Co2.heat_plus -- Value = [Transition Co2.heat_plus]

Token is: []

Predecessor place: [Place Co2.p7]

Successor place: [Place Co2.p8]

Transition is defined by Key = Co2.curation -- Value = [Transition Co2.curation]

Token is: [Token: {"Co2", "my_name", 1}, Token: {"Co2", "my_name", 3}]

Predecessor place: [Place Co2.p2]

Successor place: [Place Co2.p5]

Transition is defined by Key = Co2.heat_moins -- Value = [Transition Co2.heat_moins]

Token is: []

Predecessor place: [Place Co2.p7]

Successor place: [Place Co2.p8]

Transition is defined by Key = Co2.store -- Value = [Transition Co2.store]

Token is: []

Predecessor place: [Place Co2.p8]

Successor place: [Place Co2.Terminated]

Transition is defined by Key = Co2.compress -- Value = [Transition Co2.compress]

Token is: [Token: {"Co2", "my_name", 1}, Token: {"Co2", "my_name", 3}]

Predecessor place: [Place Co2.p2]

Successor place: [Place Co2.p4]

Consume token number 1 from place Co2.p2

ProduceNewToken() call => [Place Co2.p5]

Life cycle places [Place Co2.p2]

Life cycle places [Place Co2.p3]

Life cycle places [Place Co2.p4]

Life cycle places [Place Co2.p5]

Life cycle places [Place Co2.p6]

Life cycle places [Place Co2.p7]

Life cycle places [Place Co2.p8]

Life cycle places [Place Co2.Created]

Life cycle places [Place Co2.Terminated]

Transition is defined by Key = Co2.compute -- Value = [Transition Co2.compute]

Token is: []

Predecessor place: [Place Co2.p6]

Successor place: [Place Co2.p7]

Transition is defined by Key = Co2.location -- Value = [Transition Co2.location]

Token is: []

Predecessor place: [Place Co2.Created]

Successor place: [Place Co2.p2]

Transition is defined by Key = Co2.filter -- Value = [Transition Co2.filter]

Token is: [Token: {"Co2", "my_name", 3}]

Predecessor place: [Place Co2.p2]

Successor place: [Place Co2.p3]

Transition is defined by Key = Co2.Create -- Value = [Transition Co2.Create]

Successor place: [Place Co2.Created]

Transition is defined by Key = Co2.t1 -- Value = [Transition Co2.t1]

Token is: []

Predecessor place: [Place Co2.p3]

Successor place: [Place Co2.p6]

Transition is defined by Key = Co2.t2 -- Value = [Transition Co2.t2]

Token is: []

Predecessor place: [Place Co2.p4]

Successor place: [Place Co2.p6]

Transition is defined by Key = Co2.t3 -- Value = [Transition Co2.t3]

Token is: [Token: {"Co2", "my_name", 1}, Token: {"Co2", "my_name", 5}]

Predecessor place: [Place Co2.p5]

Successor place: [Place Co2.p6]

Transition is defined by Key = Co2.heat_plus -- Value = [Transition Co2.heat_plus]

Token is: []

Predecessor place: [Place Co2.p7]

Successor place: [Place Co2.p8]

Transition is defined by Key = Co2.curation -- Value = [Transition Co2.curation]

Token is: [Token: {"Co2", "my_name", 3}]

Predecessor place: [Place Co2.p2]

Successor place: [Place Co2.p5]

Transition is defined by Key = Co2.heat_moins -- Value = [Transition Co2.heat_moins]

Token is: []

Predecessor place: [Place Co2.p7]

Successor place: [Place Co2.p8]

Transition is defined by Key = Co2.store -- Value = [Transition Co2.store]

Token is: []

Predecessor place: [Place Co2.p8]

Successor place: [Place Co2.Terminated]

Transition is defined by Key = Co2.compress -- Value = [Transition Co2.compress]

Token is: [Token: {"Co2", "my_name", 3}]

Predecessor place: [Place Co2.p2]

Successor place: [Place Co2.p4]

Consume token number 1 from place Co2.p5

ProduceNewToken() call => [Place Co2.p6]

Life cycle places [Place Co2.p2]

Life cycle places [Place Co2.p3]

Life cycle places [Place Co2.p4]

Life cycle places [Place Co2.p5]

Life cycle places [Place Co2.p6]

Life cycle places [Place Co2.p7]

Life cycle places [Place Co2.p8]

Life cycle places [Place Co2.Created]

Life cycle places [Place Co2.Terminated]

Transition is defined by Key = Co2.compute -- Value = [Transition Co2.compute]

Token is: [Token: {"Co2", "my_name", 1}, Token: {"Co2", "my_name", 7}]

Predecessor place: [Place Co2.p6]

Successor place: [Place Co2.p7]

Transition is defined by Key = Co2.location -- Value = [Transition Co2.location]

Token is: []

Predecessor place: [Place Co2.Created]

Successor place: [Place Co2.p2]

Transition is defined by Key = Co2.filter -- Value = [Transition Co2.filter]

Token is: [Token: {"Co2", "my_name", 3}]

Predecessor place: [Place Co2.p2]

Successor place: [Place Co2.p3]

Transition is defined by Key = Co2.Create -- Value = [Transition Co2.Create]

Successor place: [Place Co2.Created]

Transition is defined by Key = Co2.t1 -- Value = [Transition Co2.t1]

Token is: []

Predecessor place: [Place Co2.p3]

Successor place: [Place Co2.p6]

Transition is defined by Key = Co2.t2 -- Value = [Transition Co2.t2]

Token is: []

Predecessor place: [Place Co2.p4]

Successor place: [Place Co2.p6]

Transition is defined by Key = Co2.t3 -- Value = [Transition Co2.t3]

Token is: [Token: {"Co2", "my_name", 5}]

Predecessor place: [Place Co2.p5]

Successor place: [Place Co2.p6]

Transition is defined by Key = Co2.heat_plus -- Value = [Transition Co2.heat_plus]

Token is: []

Predecessor place: [Place Co2.p7]

Successor place: [Place Co2.p8]

Transition is defined by Key = Co2.curation -- Value = [Transition Co2.curation]

Token is: [Token: {"Co2", "my_name", 3}]

Predecessor place: [Place Co2.p2]

Successor place: [Place Co2.p5]

Transition is defined by Key = Co2.heat_moins -- Value = [Transition Co2.heat_moins]

Token is: []

Predecessor place: [Place Co2.p7]

Successor place: [Place Co2.p8]

Transition is defined by Key = Co2.store -- Value = [Transition Co2.store]

Token is: []

Predecessor place: [Place Co2.p8]

Successor place: [Place Co2.Terminated]

Transition is defined by Key = Co2.compress -- Value = [Transition Co2.compress]

Token is: [Token: {"Co2", "my_name", 3}]

Predecessor place: [Place Co2.p2]

Successor place: [Place Co2.p4]

Consume token number 1 from place Co2.p6

ProduceNewToken() call => [Place Co2.p7]

Life cycle places [Place Co2.p2]

Life cycle places [Place Co2.p3]

Life cycle places [Place Co2.p4]

Life cycle places [Place Co2.p5]

Life cycle places [Place Co2.p6]

Life cycle places [Place Co2.p7]

Life cycle places [Place Co2.p8]

Life cycle places [Place Co2.Created]

Life cycle places [Place Co2.Terminated]

Transition is defined by Key = Co2.compute -- Value = [Transition Co2.compute]

Token is: [Token: {"Co2", "my_name", 7}]

Predecessor place: [Place Co2.p6]

Successor place: [Place Co2.p7]

Transition is defined by Key = Co2.location -- Value = [Transition Co2.location]

Token is: []

Predecessor place: [Place Co2.Created]

Successor place: [Place Co2.p2]

Transition is defined by Key = Co2.filter -- Value = [Transition Co2.filter]

Token is: [Token: {"Co2", "my_name", 3}]

Predecessor place: [Place Co2.p2]

Successor place: [Place Co2.p3]

Transition is defined by Key = Co2.Create -- Value = [Transition Co2.Create]

Successor place: [Place Co2.Created]

Transition is defined by Key = Co2.t1 -- Value = [Transition Co2.t1]

Token is: []

Predecessor place: [Place Co2.p3]

Successor place: [Place Co2.p6]

Transition is defined by Key = Co2.t2 -- Value = [Transition Co2.t2]

Token is: []

Predecessor place: [Place Co2.p4]

Successor place: [Place Co2.p6]

Transition is defined by Key = Co2.t3 -- Value = [Transition Co2.t3]

Token is: [Token: {"Co2", "my_name", 5}]

Predecessor place: [Place Co2.p5]

Successor place: [Place Co2.p6]

Transition is defined by Key = Co2.heat_plus -- Value = [Transition Co2.heat_plus]

Token is: [Token: {"Co2", "my_name", 1}, Token: {"Co2", "my_name", 9}]

Predecessor place: [Place Co2.p7]

Successor place: [Place Co2.p8]

Transition is defined by Key = Co2.curation -- Value = [Transition Co2.curation]

Token is: [Token: {"Co2", "my_name", 3}]

Predecessor place: [Place Co2.p2]

Successor place: [Place Co2.p5]

Transition is defined by Key = Co2.heat_moins -- Value = [Transition Co2.heat_moins]

Token is: [Token: {"Co2", "my_name", 1}, Token: {"Co2", "my_name", 9}]

Predecessor place: [Place Co2.p7]

Successor place: [Place Co2.p8]

Transition is defined by Key = Co2.store -- Value = [Transition Co2.store]

Token is: []

Predecessor place: [Place Co2.p8]

Successor place: [Place Co2.Terminated]

Transition is defined by Key = Co2.compress -- Value = [Transition Co2.compress]

Token is: [Token: {"Co2", "my_name", 3}]

Predecessor place: [Place Co2.p2]

Successor place: [Place Co2.p4]

Consume token number 1 from place Co2.p7

ProduceNewToken() call => [Place Co2.p8]

Life cycle places [Place Co2.p2]

Life cycle places [Place Co2.p3]

Life cycle places [Place Co2.p4]

Life cycle places [Place Co2.p5]

Life cycle places [Place Co2.p6]

Life cycle places [Place Co2.p7]

Life cycle places [Place Co2.p8]

Life cycle places [Place Co2.Created]

Life cycle places [Place Co2.Terminated]

Transition is defined by Key = Co2.compute -- Value = [Transition Co2.compute]

Token is: [Token: {"Co2", "my_name", 7}]

Predecessor place: [Place Co2.p6]

Successor place: [Place Co2.p7]

Transition is defined by Key = Co2.location -- Value = [Transition Co2.location]

Token is: []

Predecessor place: [Place Co2.Created]

Successor place: [Place Co2.p2]

Transition is defined by Key = Co2.filter -- Value = [Transition Co2.filter]

Token is: [Token: {"Co2", "my_name", 3}]

Predecessor place: [Place Co2.p2]

Successor place: [Place Co2.p3]

Transition is defined by Key = Co2.Create -- Value = [Transition Co2.Create]

Successor place: [Place Co2.Created]

Transition is defined by Key = Co2.t1 -- Value = [Transition Co2.t1]

Token is: []

Predecessor place: [Place Co2.p3]

Successor place: [Place Co2.p6]

Transition is defined by Key = Co2.t2 -- Value = [Transition Co2.t2]

Token is: []

Predecessor place: [Place Co2.p4]

Successor place: [Place Co2.p6]

Transition is defined by Key = Co2.t3 -- Value = [Transition Co2.t3]

Token is: [Token: {"Co2", "my_name", 5}]

Predecessor place: [Place Co2.p5]

Successor place: [Place Co2.p6]

Transition is defined by Key = Co2.heat_plus -- Value = [Transition Co2.heat_plus]

Token is: [Token: {"Co2", "my_name", 9}]

Predecessor place: [Place Co2.p7]

Successor place: [Place Co2.p8]

Transition is defined by Key = Co2.curation -- Value = [Transition Co2.curation]

Token is: [Token: {"Co2", "my_name", 3}]

Predecessor place: [Place Co2.p2]

Successor place: [Place Co2.p5]

Transition is defined by Key = Co2.heat_moins -- Value = [Transition Co2.heat_moins]

Token is: [Token: {"Co2", "my_name", 9}]

Predecessor place: [Place Co2.p7]

Successor place: [Place Co2.p8]

Transition is defined by Key = Co2.store -- Value = [Transition Co2.store]

Token is: [Token: {"Co2", "my_name", 1}, Token: {"Co2", "my_name", 11}]

Predecessor place: [Place Co2.p8]

Successor place: [Place Co2.Terminated]

Transition is defined by Key = Co2.compress -- Value = [Transition Co2.compress]

Token is: [Token: {"Co2", "my_name", 3}]

Predecessor place: [Place Co2.p2]

Successor place: [Place Co2.p4]

Consume token number 1 from place Co2.p8

ProduceNewToken() call => [Place Co2.Terminated]

terminal 2

==========

cerin@ordinateur-cerin:~/Bureau/ActiveData/src$ ../../graalvm-ce-java11-20.2.0/bin/java -cp "../dist/active-data-lib-0.2.0.jar:../../graalvm-ce-java11-20.2.0/lib/graalvm/polyglot-native-api.jar" org.inria.activedata.examples.CO2.Co2Client

Connecting to Active Data service... OK

Subscribing to transition... OK...!

Transition location

Initial data set produced by a Javascript code: [0, 2, 4, 6, 8, 10, 12, 14, 16, 18]

Transition curation

Data set produced by a Python code: [2, 4, 6, 8, 10, 12]

Transition t3

Transition compute

Data set produced by a Ruby code is the sum: 42

Transition heat_plus

Transition store

terminal 3

==========

cerin@ordinateur-cerin:~/Bureau/ActiveData$ cd src

cerin@ordinateur-cerin:~/Bureau/ActiveData/src$ ../../graalvm-ce-java11-20.2.0/bin/java -cp ../dist/active-data-lib-0.2.0.jar org.inria.activedata.examples.cmdline.PublishTransition localhost -m org.inria.activedata.examples.CO2.Co2Model -t Co2.location -sid Co2 -uid my_name

Using the default transition dealer

cerin@ordinateur-cerin:~/Bureau/ActiveData/src$ ../../graalvm-ce-java11-20.2.0/bin/java -cp ../dist/active-data-lib-0.2.0.jar org.inria.activedata.examples.cmdline.PublishTransition localhost -m org.inria.activedata.examples.CO2.Co2Model -t Co2.curation -sid Co2 -uid my_name

Using the default transition dealer

cerin@ordinateur-cerin:~/Bureau/ActiveData/src$ ../../graalvm-ce-java11-20.2.0/bin/java -cp ../dist/active-data-lib-0.2.0.jar org.inria.activedata.examples.cmdline.PublishTransition localhost -m org.inria.activedata.examples.CO2.Co2Model -t Co2.t3 -sid Co2 -uid my_name

Using the default transition dealer

cerin@ordinateur-cerin:~/Bureau/ActiveData/src$ ../../graalvm-ce-java11-20.2.0/bin/java -cp ../dist/active-data-lib-0.2.0.jar org.inria.activedata.examples.cmdline.PublishTransition localhost -m org.inria.activedata.examples.CO2.Co2Model -t Co2.compute -sid Co2 -uid my_name

Using the default transition dealer

cerin@ordinateur-cerin:~/Bureau/ActiveData/src$ ../../graalvm-ce-java11-20.2.0/bin/java -cp ../dist/active-data-lib-0.2.0.jar org.inria.activedata.examples.cmdline.PublishTransition localhost -m org.inria.activedata.examples.CO2.Co2Model -t Co2.heat_plus -sid Co2 -uid my_name

Using the default transition dealer

cerin@ordinateur-cerin:~/Bureau/ActiveData/src$ ../../graalvm-ce-java11-20.2.0/bin/java -cp ../dist/active-data-lib-0.2.0.jar org.inria.activedata.examples.cmdline.PublishTransition localhost -m org.inria.activedata.examples.CO2.Co2Model -t Co2.store -sid Co2 -uid my_name

Using the default transition dealer

cerin@ordinateur-cerin:~/Bureau/ActiveData/src$

The CO2 example is organized into three files. We also list the code of a verbose mode for the handler dealer, as well as the build.xml file used to compile the ActiveData source files with the GraalVM frontend.

File Co2Model.java:

package org.inria.activedata.examples.CO2;

import org.inria.activedata.model.LifeCycleModel;

import org.inria.activedata.model.Place;

import org.inria.activedata.model.Transition;

public class Co2Model extends LifeCycleModel {

private static final long serialVersionUID = 6325323393042152760L;

public Co2Model() {

super("Co2");

Place p1 = getStartPlace();

Place p9 = getEndPlace();

Place p2 = addPlace("p2");

Place p3 = addPlace("p3");

Place p4 = addPlace("p4");

Place p5 = addPlace("p5");

Place p6 = addPlace("p6");

Place p7 = addPlace("p7");

Place p8 = addPlace("p8");

// created -| location |-> p2

Transition location = addTransition("location");

addArc(p1, location);

addArc(location, p2);

// p2 -| filter |-> p3

Transition filter = addTransition("filter");

addArc(p2, filter);

addArc(filter, p3);

// p2 -| compress |-> p4

Transition compress = addTransition("compress");

addArc(p2, compress);

addArc(compress, p4);

// p2 -| curation |-> p5

Transition curation = addTransition("curation");

addArc(p2, curation);

addArc(curation, p5);

// p3 -| t1 |-> p6

Transition t1 = addTransition("t1");

addArc(p3, t1);

addArc(t1, p6);

// p4 -| t2 |-> p6

Transition t2 = addTransition("t2");

addArc(p4, t2);

addArc(t2, p6);

// p5 -| t3 |-> p6

Transition t3 = addTransition("t3");

addArc(p5, t3);

addArc(t3, p6);

// p6 -| compute |-> p7

Transition compute = addTransition("compute");

addArc(p6, compute);

addArc(compute, p7);

// p7 -| heat_plus |-> p8

Transition heat_plus = addTransition("heat_plus");

addArc(p7, heat_plus);

addArc(heat_plus, p8);

// p7 -| heat_moins |-> p8

Transition heat_moins = addTransition("heat_moins");

addArc(p7, heat_moins);

addArc(heat_moins, p8);

// p8 -| store |-> p9

Transition store = addTransition("store");

addArc(p8, store);

addArc(store, p9);

}

}

File Co2Client.java:

package org.inria.activedata.examples.CO2;

import org.inria.activedata.model.AbstractTransition;

import org.inria.activedata.runtime.client.ActiveDataClient;

import org.inria.activedata.runtime.client.ActiveDataClientDriver;

import org.inria.activedata.runtime.communication.rmi.RMIDriver;

/**

* The Co2 example illustrates how to: - build a model consisting of nine places and 11 transitions -

* code a handler and a client to react to transitions - publish transitions using the command line

*

**/

public class Co2Client {

private static void usage() {

System.err.println(

"Usage: java -cp active-data-lib-xxx.jar org.inria.activedata.examples.CO2.Co2Client ");

System.exit(42);

}

public static void main(String[] args) {

// Instantiate the Active Data client and the models

System.out.print("Connecting to Active Data service... ");

ActiveDataClient client = null;

try {

ActiveDataClientDriver driver = new RMIDriver("localhost", 1200);

driver.connect();

ActiveDataClient.init(driver);

client = ActiveDataClient.getInstance();

client.setPullDelay(2000);

client.start();

} catch (Exception e) {

System.out.println("Error");

e.printStackTrace();

System.exit(1);

}

System.out.println("OK");

// Setup the Co2 handler

System.out.print("Subscribing to transition... ");

// create a model and place a first token called my_name

Co2Model model = new Co2Model();

try {

client.createAndPublishLifeCycle(model, "my_name");

} catch (Exception e) {

System.out.println("cannot create the token");

}

// Retrieve the transition we want to subscribe to

AbstractTransition thw_location = model.getTransition("Co2.location");

// Install the corresponding handler

try {

client.subscribeTo(thw_location, new Co2Handler());

} catch (Exception e) {

System.out.println("Error");

e.printStackTrace();

System.exit(2);

}

// Retrieve the transition we want to subscribe to

AbstractTransition thw_filter = model.getTransition("Co2.filter");

// Install the corresponding handler

try {

client.subscribeTo(thw_filter, new Co2Handler());

} catch (Exception e) {

System.out.println("Error");

e.printStackTrace();

System.exit(2);

}

// Retrieve the transition we want to subscribe to

AbstractTransition thw_compress = model.getTransition("Co2.compress");

// Install the corresponding handler

try {

client.subscribeTo(thw_compress, new Co2Handler());

} catch (Exception e) {

System.out.println("Error");

e.printStackTrace();

System.exit(2);

}

// Retrieve the transition we want to subscribe to

AbstractTransition thw_curation = model.getTransition("Co2.curation");

// Install the corresponding handler

try {

client.subscribeTo(thw_curation, new Co2Handler());

} catch (Exception e) {

System.out.println("Error");

e.printStackTrace();

System.exit(2);

}

// Retrieve the transition we want to subscribe to

AbstractTransition thw_t1 = model.getTransition("Co2.t1");

// Install the corresponding handler

try {

client.subscribeTo(thw_t1, new Co2Handler());

} catch (Exception e) {

System.out.println("Error");

e.printStackTrace();

System.exit(2);

}

// Retrieve the transition we want to subscribe to

AbstractTransition thw_t2 = model.getTransition("Co2.t2");

// Install the corresponding handler

try {

client.subscribeTo(thw_t2, new Co2Handler());

} catch (Exception e) {

System.out.println("Error");

e.printStackTrace();

System.exit(2);

}

// Retrieve the transition we want to subscribe to

AbstractTransition thw_t3 = model.getTransition("Co2.t3");

// Install the corresponding handler

try {

client.subscribeTo(thw_t3, new Co2Handler());

} catch (Exception e) {

System.out.println("Error");

e.printStackTrace();

System.exit(2);

}

// Retrieve the transition we want to subscribe to

AbstractTransition thw_compute = model.getTransition("Co2.compute");

// Install the corresponding handler

try {

client.subscribeTo(thw_compute, new Co2Handler());

} catch (Exception e) {

System.out.println("Error");

e.printStackTrace();

System.exit(2);

}

// Retrieve the transition we want to subscribe to

AbstractTransition thw_heat_plus = model.getTransition("Co2.heat_plus");

// Install the corresponding handler

try {

client.subscribeTo(thw_heat_plus, new Co2Handler());

} catch (Exception e) {

System.out.println("Error");

e.printStackTrace();

System.exit(2);

}

// Retrieve the transition we want to subscribe to

AbstractTransition thw_heat_moins = model.getTransition("Co2.heat_moins");

// Install the corresponding handler

try {

client.subscribeTo(thw_heat_moins, new Co2Handler());

} catch (Exception e) {

System.out.println("Error");

e.printStackTrace();

System.exit(2);

}

// Retrieve the transition we want to subscribe to

AbstractTransition thw_store = model.getTransition("Co2.store");

// Install the corresponding handler

try {

client.subscribeTo(thw_store, new Co2Handler());

} catch (Exception e) {

System.out.println("Error");

e.printStackTrace();

System.exit(2);

}

System.out.println("OK...!");

}

}
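The eleven subscription blocks in Co2Client differ only in the transition name, so they could be driven by a loop. The following is a minimal sketch of that idea, not the author's code: `SubscribeLoopSketch` and its `Subscriber` interface are hypothetical stand-ins, introduced so that the example runs without the ActiveData library. In the real client, the body of the lambda would be the pair of calls already used in the listing, `model.getTransition(name)` and `client.subscribeTo(...)`.

```java
import java.util.ArrayList;
import java.util.List;

public class SubscribeLoopSketch {

  /** Hypothetical stand-in for "look up the transition and subscribe a handler to it". */
  public interface Subscriber {
    void subscribe(String transitionName) throws Exception;
  }

  // The eleven transition names used by Co2Client.
  public static final String[] TRANSITIONS = {
    "Co2.location", "Co2.filter", "Co2.compress", "Co2.curation",
    "Co2.t1", "Co2.t2", "Co2.t3", "Co2.compute",
    "Co2.heat_plus", "Co2.heat_moins", "Co2.store"
  };

  /** Subscribes to each transition in turn and returns how many succeeded before a failure. */
  public static int subscribeAll(Subscriber subscriber) {
    int done = 0;
    for (String name : TRANSITIONS) {
      try {
        subscriber.subscribe(name);
        done++;
      } catch (Exception e) {
        System.out.println("Error while subscribing to " + name);
        e.printStackTrace();
        return done; // Co2Client calls System.exit(2) at this point
      }
    }
    return done;
  }

  public static void main(String[] args) {
    List<String> seen = new ArrayList<>();
    // In Co2Client the lambda body would be:
    //   client.subscribeTo(model.getTransition(name), new Co2Handler());
    int n = subscribeAll(seen::add);
    System.out.println("Subscribed to " + n + " transitions: " + seen);
  }
}
```

Keeping the names in one array also makes it easy to check that the client subscribes to all 11 transitions announced in the header comment.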

File Co2Handler.java:

package org.inria.activedata.examples.CO2;

import java.util.ArrayList;

import org.graalvm.polyglot.*;

import org.inria.activedata.model.AbstractTransition;

import org.inria.activedata.model.Token;

import org.inria.activedata.runtime.client.ActiveDataClient;

import org.inria.activedata.runtime.client.TransitionHandler;

//

// This file uses the polyglot facilities of GraalVM

// (https://www.graalvm.org) to interface different

// programming languages.

//

// The polyglot interface is described on different

// sites, among them:

// - https://www.graalvm.org/reference-manual/embed-languages/

// - https://docs.oracle.com/en/graalvm/enterprise/20/sdk/org/graalvm/polyglot/Value.html

//

// Note: use google-java-format to format the java code as follows:

// java -jar

// /home/cerin/Bureau/google-java-format/core/target/google-java-format-1.10-SNAPSHOT-all-deps.jar

// Co2Handler.java > Co2Handler1.java

//

public class Co2Handler implements TransitionHandler {

public static final String MSG = "Hello token ";

public static ArrayList<Integer> MyArray = new ArrayList<>();

public static ArrayList MyArray1 = new ArrayList();

public static Value array1;

private ActiveDataClient client;

public Co2Handler() {

client = ActiveDataClient.getInstance();

}

@Override

public void handler(

AbstractTransition transition, boolean isLocal, Token[] inTokens, Token[] outTokens) {

// System.out.println(MSG + inTokens[0].getUid());

switch (transition.getFullName()) {

case "Co2.Create":

System.out.println("Transition create");

break;

case "Co2.location":

System.out.println("Transition location");

Context polyglot = Context.create();

Value array = polyglot.eval("js", "[0,1,2,3,4,5,6,7,8,9]");

int result = array.getArrayElement(2).asInt();

for (int i = 0; i < array.getArraySize(); i++)

MyArray.add(array.getArrayElement(i).asInt() * result);

System.out.println("Initial data set produced by a Javascript code: " + MyArray.toString());

break;

case "Co2.filter":

System.out.println("Transition filter");

System.out.println("Data set produced: " + MyArray.toString());

break;

case "Co2.compress":

System.out.println("Transition compress");

break;

case "Co2.curation":

System.out.println("Transition curation");

Context polyglot1 = Context.create();

array1 = polyglot1.eval("python", MyArray.toString() + "[1:7]");

System.out.println("Data set produced by a Python code: " + array1.toString());

break;

case "Co2.t1":

System.out.println("Transition t1");

break;

case "Co2.t2":

System.out.println("Transition t2");

break;

case "Co2.t3":

System.out.println("Transition t3");

break;

case "Co2.compute":

System.out.println("Transition compute");

// array1 stays null until the curation transition has fired;

// the resulting exception is reported by the catch block below

try {

Context polyglot2 = Context.create();

Value x = polyglot2.eval("ruby", array1.toString() + ".sum");

System.out.println("Data set produced by a Ruby code is the sum: " + x.toString());

} catch (Exception e) {

System.out.println("Data set produced by a Ruby code is the sum equal to zero");

}

break;

case "Co2.heat_plus":

System.out.println("Transition heat_plus");

break;

case "Co2.heat_moins":

System.out.println("Transition heat_moins");

break;

case "Co2.store":

System.out.println("Transition store");

break;

default:

System.out.println("Unknown transition");

break;

}

}

}
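To make the data flow of this handler easier to follow, here is a plain-Java sketch that reproduces, without GraalVM, what the three polyglot snippets compute along the path location, curation, compute. The class `Co2DataFlowSketch` and its method names are hypothetical; they only mirror the JavaScript, Python and Ruby expressions used in the handler and are not part of ActiveData.

```java
import java.util.ArrayList;
import java.util.List;

public class Co2DataFlowSketch {

  // Mirrors "Co2.location": the JavaScript array [0..9], with each element
  // multiplied by the element found at index 2.
  public static List<Integer> location() {
    int[] js = {0, 1, 2, 3, 4, 5, 6, 7, 8, 9};
    int factor = js[2];
    List<Integer> out = new ArrayList<>();
    for (int v : js) {
      out.add(v * factor);
    }
    return out;
  }

  // Mirrors "Co2.curation": the Python slice [1:7] keeps indices 1 to 6.
  public static List<Integer> curation(List<Integer> data) {
    return new ArrayList<>(data.subList(1, 7));
  }

  // Mirrors "Co2.compute": the Ruby call .sum adds all the elements.
  public static int compute(List<Integer> data) {
    int sum = 0;
    for (int v : data) {
      sum += v;
    }
    return sum;
  }

  public static void main(String[] args) {
    List<Integer> initial = location();
    List<Integer> curated = curation(initial);
    System.out.println("location: " + initial); // [0, 2, 4, 6, 8, 10, 12, 14, 16, 18]
    System.out.println("curation: " + curated); // [2, 4, 6, 8, 10, 12]
    System.out.println("compute:  " + compute(curated)); // 42
  }
}
```

The handler can pass `MyArray.toString() + "[1:7]"` to Python because the string produced by `ArrayList.toString()`, for instance `[0, 2, 4]`, has the same syntax as a Python list literal.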

File DefaultTransitionDealer.java for a verbose mode:

package org.inria.activedata.model;

import java.util.Iterator;
import java.util.Map;

/**
 * Implements the default behavior for a transition, which is taking
 * the appropriate number of tokens from the input place, and putting
 * the appropriate number of tokens on the output place.
 *
 * The way this implementation picks input tokens is not documented
 * and as such no assumption should ever be made about which particular
 * token will be consumed.
 *
 * When the number of produced tokens is greater than the number of
 * consumed tokens, new replicas are created.
 */
public class DefaultTransitionDealer extends TransitionDealer {
  private static final long serialVersionUID = 1560017850206649500L;

  @Override
  public void doDeal(LifeCycle lc, Transition t) {
    // Traverse the places in the life cycle
    for (Place p : lc.getModel().getPlaces()) {
      System.out.printf("Life cycle places %s%n", p.toString());
    }

    // Traverse the transitions
    for (Map.Entry<String, AbstractTransition> T : lc.getModel().getTransitions().entrySet()) {
      System.out.println("Transition is defined by Key = " + T.getKey() + " -- Value = " + T.getValue());

      // Traverse the tokens for transition T (input side)
      for (PreArc a : T.getValue().getPreArcs()) {
        for (int i = 0; i < a.getArity(); i++) {
          System.out.printf(" Token is: %s%n", lc.getTokens(a.getStart().getFullName()).values());
          System.out.printf(" Predecessor place: %s%n", a.getStart());
        }
      }

      // Traverse the tokens for transition T (output side)
      for (PostArc a : T.getValue().getPostArcs()) {
        for (int i = 0; i < a.getArity(); i++) {
          // System.out.printf("BONJOUR%n");
          // System.out.printf(" Token is: %s%n",lc.getTokens(a.getStart().getFullName()).values());
          System.out.printf(" Successor place: %s%n", a.getEnd());
        }
      }
    }

    // Pick some tokens to consume
    for (PreArc a : t.preArcs) {
      Iterator<Token> preTokens = lc.getTokens(a.getStart().getFullName()).values().iterator();
      for (int i = 0; i < a.getArity(); i++) {
        consume(preTokens.next());
        System.out.printf("Consume token number %d from place %s%n", i + 1, a.getStart().getFullName());
      }
    }

    // Produce some tokens (taken from the consumed set)
    Iterator<Token> iConsumed = getConsumed().iterator();
    for (PostArc a : t.postArcs) {
      int produced = 0;

      // Start by moving tokens around
      while (produced < a.getArity() && iConsumed.hasNext()) {
        produce(iConsumed.next(), a.getEnd());
        produced++;
      }

      // Comment by Christophe Cerin
      // System.out.printf("Produced %d token(s)%n",produced);
      Token source = getConsumed().iterator().next();
      while (produced >= a.getArity()) {
        Token newToken = new Token(lc, source.getSid(), source.getUid(), a.getEnd());
        // System.out.printf("source.getSid: %s ; source.getUid: %s%n",source.getSid(), source.getUid());
        produceNewToken(newToken, a.getEnd());
        System.out.printf("ProduceNewToken() call => %s %n", a.getEnd());
        produced--;
      }
    }
  }
}
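The default dealing policy can also be illustrated outside ActiveData. The sketch below is a self-contained toy model that follows the behavior described in the Javadoc comment of DefaultTransitionDealer (consume tokens on the input places, move them to the output places, create replicas when more tokens are needed); it is not a transcription of the listing. The names `DealSketch`, `Arc`, `put`, `tokens` and `fire` are hypothetical, tokens are plain strings, and each place is assumed to appear in at most one input arc of a transition.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;
import java.util.Map;

public class DealSketch {

  /** An arc between a place and a transition; arity is the number of tokens it carries. */
  public static final class Arc {
    final String place;
    final int arity;

    public Arc(String place, int arity) {
      this.place = place;
      this.arity = arity;
    }
  }

  // Marking: for each place name, the tokens currently sitting on it.
  private final Map<String, Deque<String>> marking = new HashMap<>();
  private int replicas = 0;

  public void put(String place, String token) {
    marking.computeIfAbsent(place, k -> new ArrayDeque<>()).addLast(token);
  }

  public List<String> tokens(String place) {
    return new ArrayList<>(marking.getOrDefault(place, new ArrayDeque<>()));
  }

  /** Fires a transition; returns false if an input place lacks tokens. */
  public boolean fire(List<Arc> preArcs, List<Arc> postArcs) {
    for (Arc a : preArcs) {
      if (tokens(a.place).size() < a.arity) {
        return false; // the transition is not enabled
      }
    }
    // Consume the required number of tokens from each input place.
    List<String> consumed = new ArrayList<>();
    for (Arc a : preArcs) {
      for (int i = 0; i < a.arity; i++) {
        consumed.add(marking.get(a.place).pollFirst());
      }
    }
    String source = consumed.isEmpty() ? "new" : consumed.get(0);
    Iterator<String> it = consumed.iterator();
    for (Arc a : postArcs) {
      int produced = 0;
      // First move consumed tokens to the output place.
      while (produced < a.arity && it.hasNext()) {
        put(a.place, it.next());
        produced++;
      }
      // Then create replicas if the arc needs more tokens than were consumed.
      while (produced < a.arity) {
        put(a.place, source + "#replica" + (++replicas));
        produced++;
      }
    }
    return true;
  }

  public static void main(String[] args) {
    DealSketch net = new DealSketch();
    net.put("Created", "my_name");
    net.fire(List.of(new Arc("Created", 1)), List.of(new Arc("A", 1), new Arc("B", 1)));
    System.out.println("Created: " + net.tokens("Created"));
    System.out.println("A: " + net.tokens("A"));
    System.out.println("B: " + net.tokens("B"));
  }
}
```

In this run one token is consumed and two are required, so place A receives the original token and place B receives a replica.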

The new build.xml file, which compiles the ActiveData source files with the javac compiler shipped with GraalVM, is:

<?xml version="1.0" encoding="utf-8"?>

<project name="ActiveData" default="compile" basedir=".">

<description>Active Data Ant build file</description>

<!--

===================================================================

SETTINGS : project classpath

===================================================================

-->

<property name="version" value="0.2.0" />

<property name="src" location="src"/>

<property name="bin" location="bin"/>

<property name="lib" location="lib"/>

<property name="dist" location="dist"/>

<property name="doc" location="doc"/>

<property name="jar" location="${dist}/active-data-lib-${version}.jar"/>

<target name="init" description="Create a bunch of necessary directories">

<mkdir dir="${bin}"/>

<mkdir dir="${lib}"/>

<mkdir dir="${dist}"/>

<mkdir dir="${doc}"/>

</target>

<!--

<target name="compile" description="Compile Java sources" depends="init, dependencies">

<javac compiler="modern" srcdir="${src}" debug="on" destdir="${bin}" target="1.6"

source="1.6" classpath="${project.classpath}" includeantruntime="no"/>

</target>

-->

<!--

Change the absolute PATH here to point to your GraalVM install

-->

<property name="JDK.dir" location="/home/cerin/Bureau/graalvm-ce-java11-20.2.0" />

<property name="javac11" location="${JDK.dir}/bin/javac" />

<target name="compile" description="Compile Java sources" depends="init, dependencies">

<javac executable="${javac11}"

fork="yes"

includeantruntime="false"

srcdir="${src}" debug="on" destdir="${bin}"

classpath="${project.classpath}"

/>

</target>

<!--

===================================================================

TARGET : download dependencies

===================================================================

-->

<target name="dependencies" depends="init">

<get src="http://search.maven.org/remotecontent?filepath=junit/junit/4.10/junit-4.10.jar" dest="${lib}/junit-4.10.jar" usetimestamp="true" skipexisting="true"/>

<get src="https://repo1.maven.org/maven2/javax/mail/mail/1.4.5/mail-1.4.5.jar" dest="${lib}/mail-1.4.5.jar" usetimestamp="true" skipexisting="true"/>

<get src="https://repo1.maven.org/maven2/org/twitter4j/twitter4j-core/4.0.7/twitter4j-core-4.0.7.jar" dest="${lib}/twitter4j-core-4.0.7.jar" usetimestamp="true" skipexisting="true"/>

<path id="id.project.classpath">

<pathelement location="${bin}" />

<fileset dir="${lib}">

<include name="**/*.jar"/>

</fileset>

</path>

<property name="project.classpath" refid="id.project.classpath"/>

</target>

<!--

===================================================================

TARGET : prepare a new release

===================================================================

-->

<target name="dist" description="Generate the distribution" depends="clean, unit-tests, jar, tar, javadoc"/>

<target name="tar">

<tar basedir="." destfile="${dist}/active-data-src-${version}.tar.gz"

excludes=".gitignore, *.git/, .classpath, .project, .settings/, *~, bin/, lib/, dist/, doc/, www/, xp_engine/,

TODO, src/org/inria/activedata/model/builder/">

</tar>

</target>

<!--

===================================================================

TARGET : build the library jar

===================================================================

-->

<target name="jar" depends="compile">

<jar destfile="${jar}">

<fileset dir="${bin}">

<exclude name="**/org/inria/activedata/model/builder/**" />

</fileset>

<fileset file="server.policy"/>

<manifest>

<attribute name="Main-Class" value="org.inria.activedata.examples.cmdline.RunService" />

</manifest>

</jar>

</target>

<!--

===================================================================

TARGET : clean

===================================================================

-->

<target name="clean" description="Cleanup">

<delete includeemptydirs="yes" quiet="yes">

<fileset dir="${bin}" includes="**/*"/>

<fileset dir="${dist}" includes="**/*"/>

<fileset dir="${doc}" includes="**/*"/>

</delete>

</target>

<!--

===================================================================

TARGET : generate the JavaDoc

===================================================================

-->

<target name="javadoc" depends="dev-javadoc, api-javadoc"/>

<target name="dev-javadoc" description="Full documentation (including private and protected members)." depends="dependencies">

<javadoc destdir="${doc}/dev"

author="true"

version="true"

use="true"

access="private"

Locale="en_US"

windowtitle="ActiveData developer documentation"

classpathref="id.project.classpath">

<packageset dir="src" defaultexcludes="yes">

<include name="org/inria/activedata/**"/>

<exclude name="org/inria/activedata/test"/>

<exclude name="org/inria/activedata/examples"/>

<exclude name="org/inria/activedata/model/builder"/>

</packageset>

</javadoc>

</target>

<target name="api-javadoc" description="API documentation" depends="dependencies">

<javadoc destdir="${doc}/api"

author="true"

version="true"

use="true"

access="public"

Locale="en_US"

windowtitle="ActiveData API"

classpathref="id.project.classpath">

<packageset dir="src" defaultexcludes="yes">

<include name="org/inria/activedata/**"/>

<exclude name="org/inria/activedata/test"/>

<exclude name="org/inria/activedata/examples"/>

<exclude name="org/inria/activedata/model/builder"/>

</packageset>

</javadoc>

</target>

<!--

===================================================================

TARGET : run the junit test cases

===================================================================

-->

<target name="unit-tests" description="Unit tests" depends="compile">

<junit haltonfailure="yes" clonevm="true">

<classpath>

<pathelement path="${project.classpath}"/>

</classpath>

<test name="org.inria.activedata.test.AllTests"/>

<formatter type="plain" usefile="false"/>

<permissions>

<grant class="java.net.SocketPermission" name="*:1000-65535" actions="connect,accept,listen,resolve"/>

<grant class="java.security.AllPermission"/>

</permissions>

</junit>

</target>

<target name="install" description="Install jar into Maven local repository" depends="jar">

<exec executable="mvn">

<arg value="install:install-file" />

<arg value="-Dfile=${jar}" />

<arg value="-DgroupId=org.inria.activedata" />

<arg value="-DartifactId=active-data-lib" />

<arg value="-Dversion=${version}" />

<arg value="-Dpackaging=jar" />

</exec>

</target>

</project>

Christophe Cérin

christophe.cerin@univ-paris13.fr

December 6, 2020